How to manipulate live stream content in WebRTC is one of the questions we are asked most often. You can do this in Ant Media Server by using a canvas as the live stream source in WebRTC. Of course, the question comes in different forms. Here are some examples:

- How can I add my logo to a live stream?

- How can I add a watermark to my live stream?

- My live stream doesn’t have video. Can I display a static image instead?

In this blog post, we will show three use cases of using a canvas as a live stream source:

- Putting a logo on a live stream

- Canvas with audio

- Putting a background image on a live stream

Let’s start with the first one.

Putting a Logo on a Live Stream

In this part, we will use the publish stream sample that comes with the Ant Media Server installation. The idea is to use a canvas as the stream source. We draw two things on the canvas: the current frame of the video element and the logo image, which in our sample is a PNG file.

First, put a canvas element above the video element:

```html
<div class="col-sm-12 form-group">
  <canvas id="canvas" width="200" height="150"></canvas>
  <p>
    <video id="localVideo" autoplay muted controls playsinline></video>
  </p>
</div>
```

Then use this canvas to merge the logo image and the video frame. First, create an Image instance from the PNG file. Second, create a draw method that draws the video frame and then the logo image on top of it. Call this method every 25 ms with setInterval. Last, capture the canvas content at 25 fps using the captureStream method:

```javascript
var canvas = document.getElementById('canvas');
var vid = document.getElementById('localVideo');

// Logo to overlay on the video
var image = new Image();
image.src = "antmedia.png";

function draw() {
  if (canvas.getContext) {
    var ctx = canvas.getContext('2d');
    // Draw the current video frame, scaled to the canvas size
    ctx.drawImage(vid, 0, 0, 200, 150);
    // Draw the logo on top of the video frame
    ctx.drawImage(image, 50, 10, 100, 30);
  }
}

// Update the canvas every 25 ms
setInterval(function() { draw(); }, 25);

// Capture a 25 fps stream from the canvas
var localStream = canvas.captureStream(25);
```
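The logo coordinates in the draw method are hard-coded for a 200x150 canvas. Below is a minimal sketch of a hypothetical helper, `logoRect`, that derives them instead: it scales the logo to a target width, keeps its aspect ratio, and centers it horizontally. It assumes the image has finished loading, so that `naturalWidth` and `naturalHeight` are known.

```javascript
// Hypothetical helper (not part of Ant Media Server): compute where to draw
// a logo so it is scaled to targetWidth, keeps its aspect ratio, and is
// horizontally centered, marginTop pixels from the top of the canvas.
function logoRect(canvasWidth, logoNaturalWidth, logoNaturalHeight, targetWidth, marginTop) {
  // Scale factor that maps the logo's natural width to the target width
  const scale = targetWidth / logoNaturalWidth;
  const w = targetWidth;
  const h = Math.round(logoNaturalHeight * scale);
  // Equal free space on the left and right of the logo
  const x = Math.round((canvasWidth - w) / 2);
  return { x: x, y: marginTop, w: w, h: h };
}

// Possible use inside draw(), once the image has loaded:
// var r = logoRect(canvas.width, image.naturalWidth, image.naturalHeight, 100, 10);
// ctx.drawImage(image, r.x, r.y, r.w, r.h);
```

For a logo with a 10:3 aspect ratio on the 200x150 canvas, this yields the same rectangle as the sample (x 50, y 10, width 100, height 30).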

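Finally, initialize the WebRTC adaptor with the localStream created from the canvas. The canvas stream contains only video, so we also add the microphone's audio track to it before passing localStream to WebRTCAdaptor:

```javascript
// Get camera video and microphone audio with getUserMedia
navigator.mediaDevices.getUserMedia({video: true, audio: true}).then(function (stream) {
  // Show the camera in the video element so that draw() can copy its frames
  var video = document.querySelector('video#localVideo');
  video.srcObject = stream;
  video.onloadedmetadata = function(e) {
    video.play();
  };

  // Add the microphone track to the stream captured from the canvas
  localStream.addTrack(stream.getAudioTracks()[0]);

  // Initialize the WebRTCAdaptor with the localStream created.
  // The initWebRTCAdaptor method is implemented below.
  initWebRTCAdaptor(localStream);
});
```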
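A stream returned by `canvas.captureStream()` carries only a video track, so microphone audio has to be combined with it before publishing. Below is a minimal sketch that builds a separate list of tracks instead of modifying the canvas stream in place. `tracksToPublish` is a hypothetical helper, not part of the Ant Media SDK; it relies only on the standard `getVideoTracks()` and `getAudioTracks()` methods of `MediaStream`.

```javascript
// Hypothetical helper: take the drawn video from the canvas stream and the
// audio from the getUserMedia stream. The camera's own video track is left
// out, because the canvas already contains the processed frames.
function tracksToPublish(canvasStream, micStream) {
  return [
    ...canvasStream.getVideoTracks(), // frames from canvas.captureStream()
    ...micStream.getAudioTracks(),    // microphone audio from getUserMedia()
  ];
}

// Possible use in the browser, inside the getUserMedia callback:
// var publishStream = new MediaStream(tracksToPublish(localStream, stream));
// initWebRTCAdaptor(publishStream);
```

Keeping the camera stream separate means it can still feed the video element that draw() copies from, while only the canvas video and the microphone audio are published.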

Canvas with Audio

In this sample, we will show how to publish only the canvas with audio, without any video content. This might be useful when you publish audio only, e.g. on a radio station website.

To achieve this, we skip drawing the video content and draw a rectangle on the canvas instead of a logo image. The drawing shows that you can do more than put a logo on the stream; you can also, for example, write text on the canvas.

For this sample, we need to change the content of the draw method. Instead of drawing an image, we draw a rectangle:

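```javascript
function draw() {
  if (canvas.getContext) {
    var ctx = canvas.getContext('2d');
    // Clear the previous frame so that the semi-transparent rectangle
    // does not build up into a solid color over repeated draws
    ctx.clearRect(0, 0, canvas.width, canvas.height);
    // Semi-transparent blue rectangle
    ctx.fillStyle = 'rgba(0, 0, 200, 0.5)';
    ctx.fillRect(30, 30, 100, 50);
  }
}
```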
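Text can be drawn in the same way. Below is a minimal sketch of a hypothetical `drawCaption` function that writes a centered caption, such as a station name, using only standard `CanvasRenderingContext2D` properties and `fillText`. The font, color, and the caption string in the usage comment are placeholders.

```javascript
// Hypothetical helper: draw text centered on a canvas of the given size.
function drawCaption(ctx, text, width, height) {
  ctx.font = '16px sans-serif';
  ctx.fillStyle = 'white';
  ctx.textAlign = 'center';     // x refers to the horizontal center of the text
  ctx.textBaseline = 'middle';  // y refers to the vertical middle of the text
  ctx.fillText(text, width / 2, height / 2);
}

// Possible use inside draw(), after the rectangle:
// drawCaption(ctx, 'Live radio', canvas.width, canvas.height);
```

Setting `textAlign` and `textBaseline` lets the caller pass the canvas center directly, without measuring the text first.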

Then request only audio from getUserMedia and add the audio track to localStream:

```javascript
// Get audio only with getUserMedia
navigator.mediaDevices.getUserMedia({video: false, audio: true}).then(function (stream) {
  // Add the audio track to the localStream captured from the canvas
  localStream.addTrack(stream.getAudioTracks()[0]);

  // Initialize the WebRTCAdaptor with the localStream created.
  // The initWebRTCAdaptor method is implemented below.
  initWebRTCAdaptor(localStream);
});
```

Now, when you play the stream, you will see the rectangle and hear the audio.

Putting a Background Image on a Live Stream

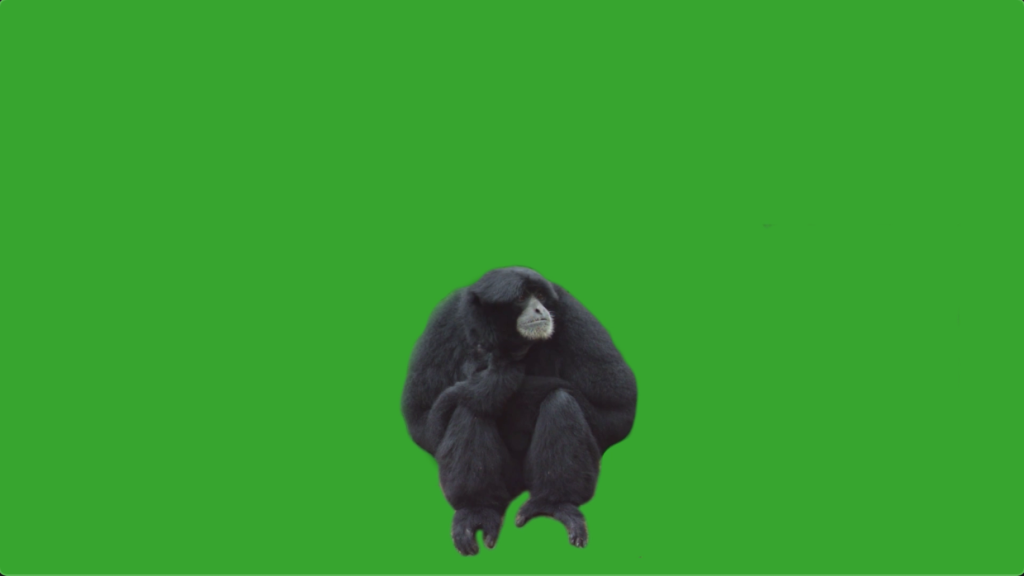

This use case is also known as the green-screen effect: you live stream in front of a green screen and replace that green screen with something else, for example a background image of your choice. Let’s see how to do this.

We start by adding two more canvas elements, so that there are three in total. The first canvas is for the video frame, the second one is for the image that we will use as the background of our live stream, and the third one is for merging the two:

```html
<canvas id="canvas" width="200" height="150"></canvas>
<canvas id="canvas2" width="200" height="150"></canvas>
<canvas id="canvas3" width="200" height="150"></canvas>
```

```javascript
var canvas = document.getElementById('canvas');   // video frame
var canvas2 = document.getElementById('canvas2'); // background image
var canvas3 = document.getElementById('canvas3'); // merged output
var vid = document.getElementById('localVideo');

var image = new Image();
image.src = "antmedia.png";

function draw() {
  if (canvas.getContext) {
    var ctx = canvas.getContext('2d');
    var ctx2 = canvas2.getContext('2d');
    var ctx3 = canvas3.getContext('2d');

    // Pixels of the background image
    ctx2.drawImage(image, 0, 0, 200, 150);
    let frame2 = ctx2.getImageData(0, 0, 200, 150);

    // Pixels of the current video frame
    ctx.drawImage(vid, 0, 0, 200, 150);
    let frame = ctx.getImageData(0, 0, 200, 150);

    // Each pixel takes 4 bytes: R, G, B, A
    let l = frame.data.length / 4;
    for (let i = 0; i < l; i++) {
      let r = frame.data[i * 4 + 0];
      let g = frame.data[i * 4 + 1];
      let b = frame.data[i * 4 + 2];
      // Key color: strong red and green with little blue.
      // Tune these thresholds to match the color of your own screen.
      if (g > 100 && r > 100 && b < 43) {
        // Replace the keyed pixel with the background pixel
        frame.data[i * 4 + 0] = frame2.data[i * 4 + 0];
        frame.data[i * 4 + 1] = frame2.data[i * 4 + 1];
        frame.data[i * 4 + 2] = frame2.data[i * 4 + 2];
      }
    }

    // Write the merged frame to the output canvas
    ctx3.putImageData(frame, 0, 0);
  }
}

// Update the canvas every 25 ms
setInterval(function() { draw(); }, 25);

// Capture a 25 fps stream from the merged canvas
var localStream = canvas3.captureStream(25);

// Get camera video and microphone audio with getUserMedia
navigator.mediaDevices.getUserMedia({video: true, audio: true}).then(function (stream) {
  var video = document.querySelector('video#localVideo');
  video.srcObject = stream;
  video.onloadedmetadata = function(e) {
    video.play();
  };

  // Add the microphone track to the stream captured from the canvas
  localStream.addTrack(stream.getAudioTracks()[0]);

  // Initialize the WebRTCAdaptor with the localStream created.
  // The initWebRTCAdaptor method is implemented below.
  initWebRTCAdaptor(localStream);
});
```
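The per-pixel loop in draw() is where the keying happens, and its thresholds decide which colors count as "screen". Below is a minimal sketch that pulls that step out into a pure function, so the keying rule can be swapped and checked without a canvas. `chromaKey`, `sampleRule`, and `greenDominant` are hypothetical names; `greenDominant` is an untuned alternative rule for a saturated green screen, not something taken from the sample.

```javascript
// Hypothetical pure version of the keying loop. `frame` and `background`
// are RGBA byte arrays of equal length, like ImageData.data.
function chromaKey(frame, background, isKey) {
  const out = new Uint8ClampedArray(frame); // copy, so the input is not modified
  for (let i = 0; i < out.length; i += 4) {
    if (isKey(out[i], out[i + 1], out[i + 2])) {
      // Take RGB from the background pixel and keep the original alpha
      out[i] = background[i];
      out[i + 1] = background[i + 1];
      out[i + 2] = background[i + 2];
    }
  }
  return out;
}

// The rule used in the sample: strong red and green, little blue.
const sampleRule = (r, g, b) => g > 100 && r > 100 && b < 43;

// Untuned alternative: green clearly dominates both red and blue.
const greenDominant = (r, g, b) => g > 100 && g > r * 1.4 && g > b * 1.4;

// Possible use inside draw(), replacing the loop:
// var keyed = chromaKey(frame.data, frame2.data, sampleRule);
// ctx3.putImageData(new ImageData(keyed, 200, 150), 0, 0);
```

Note that the two rules select different pixels: a pure green pixel such as (0, 200, 0) fails `sampleRule` because its red value is low, while `greenDominant` keys it.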

Useful Links

You can try Ant Media Server for free with all features!

You can download native Android and iOS WebRTC SDKs and integrate them into your applications for free!

Ant Media Server GitHub Wiki

You may also want to check out RTMP to WebRTC Migration – RTMP is Dying! and Adventure Continues: CMAF is available in Ant Media Server v2.2.