We at DirectAI are excited to unveil computer-vision-enabled live video through Ant Media.

Real-time object tracking promises transformative capabilities for businesses, from augmented robotics perception stacks to quality checks in production lines. Yet, the complexity of implementing such technology, especially for teams that lack machine learning as a core focus, is often a significant barrier.

Moreover, existing solutions struggle to balance performance with iteration speed, which is a serious problem for real-time applications. They often require a large upfront investment in data assembly and training, or they only work on a fixed set of objects.

To address this, DirectAI, in collaboration with Ant Media, is revolutionizing how businesses extract information from live video streams, democratizing access to powerful, easy-to-implement computer vision technology.

With Ant Media Server, DirectAI can rapidly innovate and deploy AI-powered applications while more effectively managing the cost of model training for live video.

Ant Media’s custom server is the foundation for video applications in 130+ countries around the world. Complementing this, DirectAI enables rapid deployment of models defined in plain language, turning them into actionable insights within seconds.

DirectAI’s tech is specifically designed to meet the stringent latency and throughput demands of live video applications.

Together, Ant Media and DirectAI provide a unique and powerful solution, transforming the way businesses utilize information in live video streams, regardless of their machine learning expertise or resource availability.

Building Next-Generation Computer Vision

DirectAI has built a system that skips the model training process entirely through large Vision-Language Models trained on billions of image-caption pairs. All you need to build a model is a plain-language description (e.g. “hat”).

We work with frames in one of three ways: examining them in sequence to track objects across time, detecting sets of objects in an individual image, or categorizing images into classes.
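To make "tracking objects across time" concrete, here is a minimal sketch of one common, generic approach: greedily matching each frame's detected boxes to the previous frame's boxes by intersection-over-union (IoU). This is an illustration only and not a description of DirectAI's tracking method; the function names, the box format, and the 0.3 threshold are all assumptions.

```python
from typing import Dict, List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def update_tracks(
    tracks: Dict[int, Box],
    detections: List[Box],
    next_id: int,
    threshold: float = 0.3,  # hypothetical minimum overlap to keep an ID
) -> Tuple[Dict[int, Box], int]:
    """Greedily assign this frame's detections to the previous frame's tracks."""
    updated: Dict[int, Box] = {}
    unmatched = dict(tracks)  # tracks not yet claimed by a detection
    for det in detections:
        best_id = max(unmatched, key=lambda t: iou(unmatched[t], det), default=None)
        if best_id is not None and iou(unmatched[best_id], det) >= threshold:
            updated[best_id] = det      # same object, moved slightly
            del unmatched[best_id]
        else:
            updated[next_id] = det      # no good match: start a new track
            next_id += 1
    return updated, next_id             # tracks left unmatched are dropped


# Two objects that each move a little between consecutive frames.
frame_1 = [(0.0, 0.0, 10.0, 10.0), (50.0, 50.0, 60.0, 60.0)]
frame_2 = [(1.0, 1.0, 11.0, 11.0), (52.0, 50.0, 62.0, 60.0)]

tracks, next_id = update_tracks({}, frame_1, next_id=0)
tracks, next_id = update_tracks(tracks, frame_2, next_id)
print(tracks)
```

Both objects keep their IDs across the two frames because their boxes overlap heavily. A production tracker would also need to handle occlusion and missed detections, which this sketch ignores.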

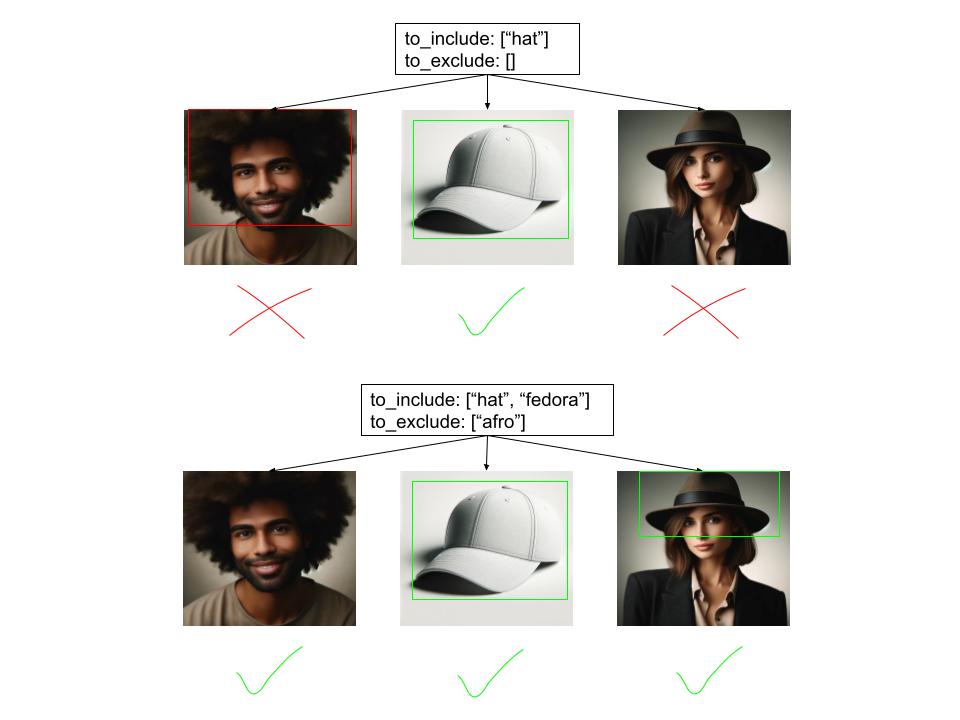

And DirectAI isn’t just using off-the-shelf machine learning. Our proprietary methods enable users to not only define objects in text, but also iterate quickly through edge-case definitions. If the “hat” model fails to detect “fedora” in testing, simply add “fedora” to the “positive” list. If the “hat” model mistakes an “afro” for a hat, simply add “afro” to the “negative” list. And if our model doesn’t understand your text description, you can just show it a single example!
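The iteration loop described above can be pictured as editing a small definition object. The sketch below is purely illustrative: `ObjectDefinition`, its field names, and the JSON shape are hypothetical and are not taken from DirectAI's actual API, which the quickstart repo documents.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class ObjectDefinition:
    """Hypothetical container for one plain-language object definition."""

    name: str
    positive: List[str] = field(default_factory=list)  # phrases that should match
    negative: List[str] = field(default_factory=list)  # look-alikes that should not

    def add_positive(self, phrase: str) -> None:
        """Record an edge case the model missed (e.g. a kind of hat)."""
        if phrase not in self.positive:
            self.positive.append(phrase)

    def add_negative(self, phrase: str) -> None:
        """Record something the model wrongly matched."""
        if phrase not in self.negative:
            self.negative.append(phrase)

    def to_json(self) -> str:
        """Serialize the definition; the key names here are assumptions."""
        return json.dumps(
            {"name": self.name, "positive": self.positive, "negative": self.negative}
        )


# Start from the plain-language description, then refine after testing.
hat = ObjectDefinition(name="hat", positive=["hat"])
hat.add_positive("fedora")  # the model missed fedoras
hat.add_negative("afro")    # the model mistook an afro for a hat
print(hat.to_json())
```

The point of the design is that each correction is a one-line text change to the definition, with no retraining step in between.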

DirectAI & Controllability

Ant Media has made the partnership process incredibly smooth. The Ant Media marketplace enables applications that have been built on top of the Ant Media Server to be monetized.

One of DirectAI’s early customers needed custom software built on top of their Ant Media server, so releasing that same work as a plugin to the public was a natural choice. What’s more, Ant Media has open-sourced a number of simple plugin templates that enabled DirectAI to extract and export stream frames for inference.

Check out a demo of DirectAI’s plugin below:

Why Computer Vision Matters for Your Application

DirectAI’s technology offers a significant breakthrough for teams that would otherwise be unable to implement computer vision due to engineering capacity or data constraints. There are a number of real-world problems we’re excited to help tackle, namely:

- Identifying obstacles or objectives for robots/drones

- Identifying harmful material (e.g. weapons or nudity) in a trust & safety context

- Detecting defects on manufacturing lines

- Anonymously counting people

- Indexing video for search

We’d love to talk to you about integrating computer vision into your Ant Media stack. Please schedule time on our calendar or reach out to ben@directai.io so we can fast-track your onboarding. Our Ant Media Marketplace listing is linked here.

If you want to learn more about DirectAI’s internal technology, feel free to check out our publicly accessible classification/detection API and quickstart repo.

About the Ant Media Server deployment on AWS

Ant Media provided a robust solution leveraging AWS’s purpose-built Media & Entertainment capabilities. Key integrations included:

- Amazon EC2: Utilized for secure, scalable compute capacity, ensuring high performance and reliability.

- AWS CloudFormation: Enabled rapid deployment of streaming clusters, allowing Ant Media Server to scale according to demand.

- WebRTC Streaming: Enabled ultra-low latency broadcasts crucial for live news delivery.

- Adaptive Bitrate Streaming: Ensured optimal viewing experiences across varying network conditions.

Key results and benefits included:

- Latency Reduction: Achieved sub-0.5 second delays.

- Scalability: Effortlessly supported viewer numbers from a few to hundreds of thousands.

- Cost Efficiency: Reduced IT costs by 30% through flexible AWS pricing models.

- Viewer Engagement: Enhanced real-time viewer experience with high-quality broadcasts.

“By working with DirectAI, we’ve learned the importance of seamless integration and real-time processing in computer vision applications. The streaming capabilities provided by Ant Media have significantly enhanced DirectAI’s ability to build and deploy powerful computer vision models using plain language, requiring no code or training. Key metrics that matter to us include sub-0.5 second latency and the ability to scale efficiently to meet varying demands.” – Alper Kızıltoprak, CSO, Ant Media.

Visit the Ant Media Server on AWS Documentation for information about Ant Media Server deployment on AWS.

About Ant Media and AWS Collaboration

Ant Media actively participates in the AWS Partner Network (APN), including programs such as AWS Customer Engagement, and in funding and credits that support customers’ Proofs of Concept (PoCs) when running Ant Media Server on Amazon EC2, Graviton, GPU, ECS, or EKS. Ant Media Server has passed the APN’s AWS Foundational Technical Review, an AWS-defined review that qualifies an Independent Software Vendor’s (ISV) offering as a validated software product. Customers can easily subscribe to and deploy Ant Media Server from the AWS Marketplace.

AWS customers that want to push the boundaries of real-time streaming can deploy Ant Media Server on Amazon EC2, Graviton, GPU, ECS, or EKS to deliver ultra-low latency with support for WebRTC, LL-DASH (CMAF), HLS, RTMP, SRT, RTSP, and Zixi. Its purpose-built streaming capabilities make Ant Media Server a strong fit for real-time streaming on AWS.