What is WebRTC Scalability?

WebRTC scalability is the ability of your system to handle more users, video sessions, or streaming load without sacrificing quality or latency. WebRTC is designed for real-time communication — and scaling real-time infrastructure requires a different mindset than scaling on-demand video like YouTube or Netflix.

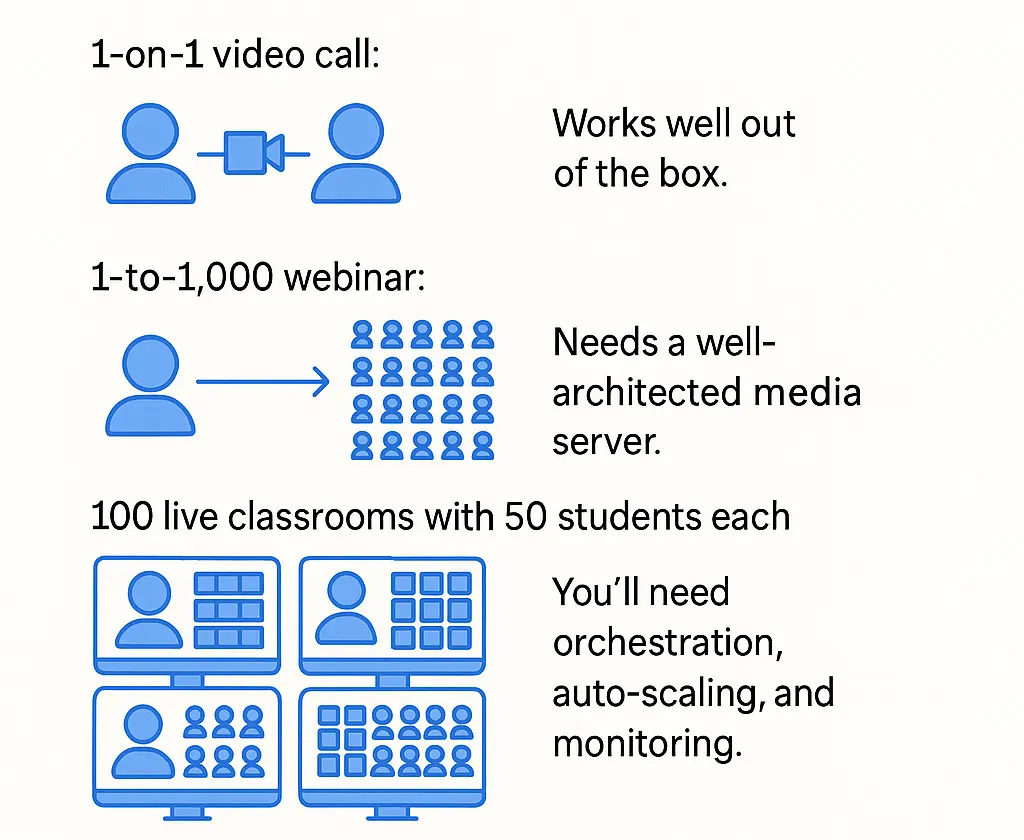

For example:

- 1-on-1 video call? Works well out of the box.

- 1-to-1,000 webinar? Needs a well-architected media server.

- 100 live classrooms with 50 students each? You’ll need orchestration, auto-scaling, and monitoring.

Scalability isn’t just about performance — it’s about planning ahead so that your system doesn’t break under pressure.

If you’re new to WebRTC or want a deeper technical dive, check out our complete guide on how WebRTC works.

Why WebRTC Is Hard to Scale

WebRTC was built for direct peer-to-peer communication, not for massive broadcast at scale. While that makes it lightning fast, it introduces technical challenges.

Challenges with Scaling WebRTC:

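- Every connection is two-way (bi-directional). In a mesh, each participant sends a separate copy of its stream to every other participant, so the total number of streams grows quadratically, not linearly, with the number of participants.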

- No CDN support: Unlike HLS or MPEG-DASH, WebRTC can’t be cached at the edge.

- Each connection eats CPU: The more participants, the more processing is needed.

- High sensitivity to network quality: Packet loss, jitter, or latency can break streams.

Example: In a peer-to-peer (mesh) video call with 6 participants, each user uploads 5 separate video streams and downloads 5 more. That's 6 × 5 = 30 one-way streams for just 6 people!
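The arithmetic in this example generalizes. With n participants, each user uploads n - 1 streams and downloads n - 1 streams, and the call carries n × (n - 1) one-way streams in total, so doubling the room size roughly quadruples the total. The small Python sketch below (the function name `mesh_streams` is just for illustration) makes the growth visible:

```python
def mesh_streams(participants: int) -> dict:
    """Count one-way media streams in a full-mesh call.

    Each participant sends its own stream to every other participant,
    so it uploads (n - 1) streams and downloads (n - 1) streams.
    """
    if participants < 2:
        return {"upload_per_user": 0, "download_per_user": 0, "total_streams": 0}
    others = participants - 1
    return {
        "upload_per_user": others,
        "download_per_user": others,
        # Every ordered (sender, receiver) pair is one stream: n * (n - 1).
        "total_streams": participants * others,
    }


if __name__ == "__main__":
    for n in (2, 4, 6, 10, 20):
        s = mesh_streams(n)
        print(f"{n:>3} participants: {s['upload_per_user']} up / "
              f"{s['download_per_user']} down per user, "
              f"{s['total_streams']} streams total")
```

For 6 participants it reports 5 uploads and 5 downloads per user and 30 streams in total; at 20 participants each browser would already be uploading 19 copies of its own video, and the call would carry 380 streams.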

Without smart routing (like SFU), things fall apart fast.

Different Architectures: Mesh, SFU, MCU

WebRTC applications typically use one of three core routing strategies:

1. Mesh

- Every user connects directly to every other user.

- Simple but non-scalable.

- Maxes out quickly due to browser and bandwidth limitations.

2. SFU (Selective Forwarding Unit)

- Each user sends one stream to the server.

- The server intelligently forwards streams to other participants.

- Efficient and scalable for 1-to-many or many-to-many scenarios.

3. MCU (Multipoint Control Unit)

- The server decodes and mixes all streams, then sends each participant a single mixed stream.

- High CPU use, but ideal for recording or legacy support.

SFU is the sweet spot — scalable, cost-efficient, and the default architecture for Ant Media’s real-time workflows.

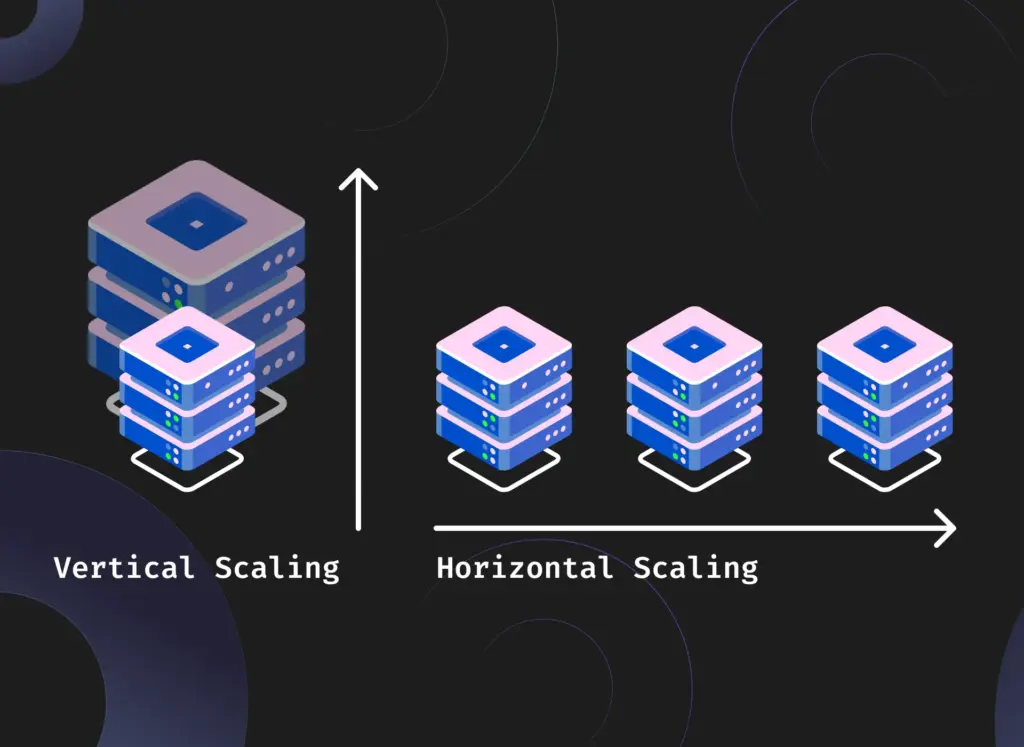
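To compare the three architectures side by side, the sketch below counts streams per participant and on the server for an n-person call in which everyone publishes one video. It is a simplified model that assumes a single bitrate per stream and ignores simulcast, audio, and protocol overhead; the function and field names are hypothetical, not part of any Ant Media API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamLoad:
    client_up: int       # streams each participant uploads
    client_down: int     # streams each participant downloads
    server_in: int       # streams the media server receives
    server_out: int      # streams the media server sends
    server_decodes: int  # streams the server must decode (mixing work)


def stream_load(architecture: str, n: int) -> StreamLoad:
    """Simplified stream counts for an n-person call where every
    participant publishes one video stream."""
    if n < 2:
        raise ValueError("need at least two participants")
    if architecture == "mesh":
        # No server: everyone sends to and receives from everyone else.
        return StreamLoad(n - 1, n - 1, 0, 0, 0)
    if architecture == "sfu":
        # One upload per client; the server forwards (without decoding)
        # every stream to every other participant.
        return StreamLoad(1, n - 1, n, n * (n - 1), 0)
    if architecture == "mcu":
        # One upload per client; the server decodes all streams, mixes
        # them, and sends each participant one composite stream.
        return StreamLoad(1, 1, n, n, n)
    raise ValueError(f"unknown architecture: {architecture}")


def client_upload_mbps(architecture: str, n: int, bitrate_kbps: int) -> float:
    """Upload bandwidth one participant needs, ignoring protocol overhead."""
    return stream_load(architecture, n).client_up * bitrate_kbps / 1000


if __name__ == "__main__":
    for arch in ("mesh", "sfu", "mcu"):
        print(arch, stream_load(arch, 10),
              f"upload at 1500 kbps: {client_upload_mbps(arch, 10, 1500)} Mbps")
```

With 10 participants at an assumed 1,500 kbps, a mesh client must upload 13.5 Mbps while an SFU or MCU client uploads only 1.5 Mbps. The SFU moves that cost to the server's outbound bandwidth (90 forwarded streams), whereas the MCU trades bandwidth for the CPU cost of decoding and mixing every stream.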

Scaling WebRTC: Vertical vs Horizontal

Vertical Scaling

- Add more resources (CPU, memory, bandwidth) to your server.

- Simple to start, but capped by the capacity of a single machine.

- Great for development, testing, and small production loads.

Horizontal Scaling

- Distribute traffic across multiple servers.

- Involves clustering, load balancing, and orchestration.

- Can support tens of thousands of concurrent users.

Ant Media Server supports both vertical and horizontal scaling — you can start with one node and scale up or out based on demand.
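When scaling out, a first-pass estimate of node count is simple division with some headroom. The sketch below assumes viewers are spread evenly across identical nodes. The per-node capacity is an input you would measure for your own bitrate and resolution (the FAQ below quotes up to 800 WebRTC viewers on a 16-core instance), and the 20% headroom default is an illustrative planning choice, not an Ant Media recommendation.

```python
import math


def nodes_needed(concurrent_viewers: int, viewers_per_node: int,
                 headroom: float = 0.2, min_nodes: int = 1) -> int:
    """Estimate how many identical nodes a horizontally scaled tier needs.

    headroom reserves spare capacity on each node (0.2 means planning to
    run every node at no more than 80% of its measured maximum).
    """
    if viewers_per_node <= 0:
        raise ValueError("viewers_per_node must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable_per_node = viewers_per_node * (1 - headroom)
    return max(min_nodes, math.ceil(concurrent_viewers / usable_per_node))
```

`nodes_needed(10_000, 800)` returns 16: each node is planned at 640 viewers, and 10,000 / 640 = 15.625 rounds up to 16. Without headroom the same audience needs 13 nodes.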

How Ant Media Server Tackles WebRTC Scalability

Ant Media Server offers built-in features to help you scale WebRTC applications from tens to tens of thousands of users.

- SFU-based architecture for efficient stream routing.

- Clustered deployments for load distribution.

- Auto-scaling on cloud platforms (AWS, Azure, GCP).

- Kubernetes and Helm chart support for containerized orchestration.

Ant Media doesn’t just give you the building blocks — it gives you ready-made tools to scale without reinventing the wheel.

Clustering in Ant Media Server

Ant Media supports clustering with both manual and cloud-based configurations. A basic cluster includes:

- Origin nodes: Accept published streams and perform tasks such as transcoding (converting streams to different formats or bitrates) and transmuxing (changing the container format of the stream).

- Edge nodes: Deliver streams to viewers. Unlike origin nodes, edge nodes do not ingest streams or perform tasks such as transcoding or transmuxing. Their sole purpose is to fetch the stream from an origin node and forward it to the viewers, ensuring efficient distribution of content.

- Load balancer: Acts as the entry point for both publishers and viewers. It receives user requests and directs each one to an appropriate node in the origin group (publishers) or the edge group (viewers), based on current load and resource availability.

- Central database: Stores all stream-related information, including bitrates, settings, the origin node of each stream, and other metadata needed for stream management.
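The division of labor between these components can be modeled in a few lines. The sketch below is a hypothetical, in-memory illustration, not Ant Media's implementation: publishers are routed to the least-loaded origin, the stream's origin is recorded in a dictionary standing in for the central database, and viewers are routed to the least-loaded edge, which uses that record to find the origin it should pull from. The class and method names are invented for this example, and the load balancer's role is folded into `Cluster`.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    role: str             # "origin" or "edge"
    connections: int = 0  # current publishers or viewers on this node


@dataclass
class Cluster:
    nodes: list[Node]
    # Stand-in for the central database: stream ID -> origin node name.
    stream_registry: dict[str, str] = field(default_factory=dict)

    def _least_loaded(self, role: str) -> Node:
        candidates = [n for n in self.nodes if n.role == role]
        if not candidates:
            raise RuntimeError(f"no {role} nodes available")
        # min() returns the first node among ties, so routing is deterministic.
        return min(candidates, key=lambda n: n.connections)

    def publish(self, stream_id: str) -> str:
        """Route a publisher to an origin node and record it centrally."""
        origin = self._least_loaded("origin")
        origin.connections += 1
        self.stream_registry[stream_id] = origin.name
        return origin.name

    def play(self, stream_id: str) -> tuple[str, str]:
        """Route a viewer to an edge node and return (edge, origin), where
        origin is the node the edge would pull the stream from."""
        if stream_id not in self.stream_registry:
            raise KeyError(f"stream {stream_id!r} is not being published")
        edge = self._least_loaded("edge")
        edge.connections += 1
        return edge.name, self.stream_registry[stream_id]


if __name__ == "__main__":
    cluster = Cluster([Node("origin-1", "origin"), Node("origin-2", "origin"),
                       Node("edge-1", "edge"), Node("edge-2", "edge")])
    cluster.publish("stream1")
    print(cluster.play("stream1"), cluster.play("stream1"))
```

With two origins and two edges, publishing `stream1` lands on `origin-1`, and the first two viewers of `stream1` are spread across `edge-1` and `edge-2`, both pulling from `origin-1`.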

You can build WebRTC clusters either on-premises or in the cloud using Ant Media Server. For Kubernetes users, a ready-to-use Helm chart simplifies deployment.

Cluster Setup Guide – Learn how to configure, deploy, and scale your streaming infrastructure efficiently using Ant Media’s clustering architecture.

Load Balancing Strategies

Ant Media Server integrates with:

- NGINX: Fast and easy to configure.

- HAProxy: More flexible routing rules.

- Cloud Load Balancers: Use region or latency-based logic.

Each option can spread connections across multiple nodes and stop routing traffic to nodes that fail health checks, which keeps the service available when a node goes down.
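Two of the distribution strategies mentioned in this article, round robin and sticky sessions, are easy to illustrate. The Python classes below are a conceptual sketch with hypothetical names; in production the load balancer itself provides this logic (for example, NGINX's default round-robin upstream balancing and its `hash` directive, or HAProxy's `balance` options).

```python
import hashlib
import itertools


class RoundRobinBalancer:
    """Hands out nodes in a fixed rotation, ignoring current load."""

    def __init__(self, nodes: list[str]) -> None:
        if not nodes:
            raise ValueError("need at least one node")
        self._cycle = itertools.cycle(nodes)

    def pick(self) -> str:
        return next(self._cycle)


class StickyBalancer:
    """Maps the same session key to the same node every time by hashing it.

    hashlib is used instead of Python's built-in hash(), whose output for
    strings changes between interpreter runs.
    """

    def __init__(self, nodes: list[str]) -> None:
        if not nodes:
            raise ValueError("need at least one node")
        self._nodes = list(nodes)

    def pick(self, session_key: str) -> str:
        digest = hashlib.sha256(session_key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._nodes)
        return self._nodes[index]
```

The sticky balancer uses plain modulo hashing, so adding or removing a node remaps most keys; consistent hashing reduces that churn at the cost of a little more code.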

For region-based WebRTC scalability, deploy clusters in different zones and let your load balancer route users to the nearest one.
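Region-based routing boils down to choosing the closest region that can still accept users. The sketch below assumes you already have per-region round-trip-time measurements for the user and a capacity flag per cluster; the region names and the `choose_region` function are hypothetical. Managed cloud load balancers and DNS services can perform this selection for you.

```python
def choose_region(rtt_ms: dict[str, float], has_capacity: dict[str, bool]) -> str:
    """Pick the lowest-latency region whose cluster still has capacity.

    rtt_ms: measured round-trip time from the user to each region.
    has_capacity: whether each region's cluster can accept more users;
    regions missing from this mapping are treated as full.
    """
    available = [region for region in rtt_ms if has_capacity.get(region, False)]
    if not available:
        raise RuntimeError("no region has spare capacity")
    return min(available, key=lambda region: rtt_ms[region])


if __name__ == "__main__":
    rtt = {"us-east": 120.0, "eu-west": 25.0, "ap-south": 210.0}
    print(choose_region(rtt, {"us-east": True, "eu-west": True, "ap-south": True}))
    print(choose_region(rtt, {"us-east": True, "eu-west": False, "ap-south": True}))
```

A user near the `eu-west` cluster is sent there while it has room; once it reports full, the same user falls back to the next-closest region, `us-east`.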

Autoscaling in Cloud Environments

Need to scale automatically based on user load or CPU usage?

Ant Media offers plug-and-play auto-scaling templates for:

| Platform | Template Docs |
|---|---|
| Amazon Web Services (AWS) | CloudFormation Template |
| Microsoft Azure | ARM Template |
| Google Cloud Platform (GCP) | Jinja Template |

These templates monitor load and dynamically add or remove nodes as needed, helping your WebRTC deployment scale efficiently and cost-effectively.
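The decision such a template automates can be sketched as a threshold rule evaluated on a schedule. The 70% and 30% CPU thresholds and the node limits below are hypothetical defaults for illustration and may not match the policies in Ant Media's templates.

```python
def desired_node_count(current_nodes: int, avg_cpu_percent: float,
                       scale_out_above: float = 70.0,
                       scale_in_below: float = 30.0,
                       min_nodes: int = 1, max_nodes: int = 20) -> int:
    """Threshold-based scaling decision, evaluated periodically.

    Adds one node when average CPU is above the scale-out threshold,
    removes one when it is below the scale-in threshold, and always stays
    within [min_nodes, max_nodes].
    """
    if avg_cpu_percent > scale_out_above:
        target = current_nodes + 1
    elif avg_cpu_percent < scale_in_below:
        target = current_nodes - 1
    else:
        target = current_nodes
    return max(min_nodes, min(max_nodes, target))
```

Keeping a gap between the scale-out and scale-in thresholds prevents the group from flapping, adding and removing a node on every evaluation when load hovers around a single threshold.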

WebRTC Scalability Scenarios

| Scenario | Scalability Challenge | Scaling Solution |
|---|---|---|
| 1-to-many webinars (e.g., 1 host, 10k viewers) | SFU must handle thousands of downstream video streams | SFU architecture + Edge servers + Auto-Scaling (Cloud) |
| Multi-classroom e-learning (100 rooms, 40 students each) | High concurrency across separate sessions | Cluster mode + Load balancing by app/session |
| Global live streaming | Delivering low-latency video to worldwide audiences | Multi-region clusters + Geo-based load balancing |
| User-generated live rooms (e.g., virtual events) | Unpredictable spikes in traffic per room | Kubernetes + Auto-scaling with Helm |
| Call centers or remote support | 100s of 1:1 WebRTC calls concurrently | SFU + Horizontal scaling (multiple Origin nodes) |
| Multilingual live streaming | Same stream to be served with multiple audio tracks | Multiple streams + Separate apps + Clustered Edge routing |
| Hybrid live + RTMP broadcast | WebRTC for real-time, RTMP for scale, or CDN broadcast | Simultaneous WebRTC + RTMP Output from SFU |

Scaling Toolkit for WebRTC with Ant Media Server

| Capability | Description | How to Use |
|---|---|---|
| SFU Architecture | Efficient forwarding of streams to 1000s of viewers | Built-in, used by default |
| Cluster Mode | Connect multiple AMS nodes for horizontal scalability | Cluster Setup Guide |
| Load Balancer Integration | Distributes traffic among nodes (region-based, round robin, sticky sessions) | Supports NGINX, HAProxy, cloud LBs |
| Auto-Scaling Templates | Add/remove instances based on CPU or traffic load | AWS, Azure, GCP templates available |
| Kubernetes Deployment | Scalable orchestration using containers and Helm charts | Helm Chart Guide |
| Region-Aware Scaling | Deploy clusters across geographies for global audiences | Pair cloud LBs with multiple clusters |
| Monitoring and Metrics | Track stream load, CPU usage, sessions per node | Track stream load, CPU usage, and sessions per node |
| RTMP Output + Recording | Scale by outputting to social platforms or CDNs for broadcast | Available via Web UI or REST API |

Conclusion: Build Small, Think Big

WebRTC scalability used to be hard. With Ant Media Server, it’s now simple, affordable, and ready to go.

Whether you’re streaming a 10-person classroom or a global event with 100,000 attendees — Ant Media helps you grow without friction.

Start lean. Scale when needed. Pay only for what you use.

FAQs on WebRTC Scalability

How many viewers can a single Ant Media Server handle?

It depends on the server's specifications (CPU, RAM) and on the stream's bitrate and resolution. For example, a 16-core CPU-optimized instance can handle up to 800 WebRTC viewers.

Can I use Ant Media Server with Kubernetes?

Yes. Ant Media Server can be scaled with Kubernetes, including managed services such as AWS EKS, Azure AKS, and GCP GKE, and it can be deployed with a Helm chart.

Is autoscaling available out of the box?

Yes. Prebuilt templates for AWS, Azure, and GCP make it easy to auto-scale based on load.

Do I need to be a developer to deploy this?

Not necessarily. Many configurations are accessible via UI or prebuilt scripts.

Estimate Your Streaming Costs

Use our free Cost Calculator to find out how much you can save with Ant Media Server based on your usage.
