Ant Media’s Flutter WebRTC SDK helps you build Flutter applications that publish and play WebRTC broadcasts with just a few lines of code. It is easy to use and integrate. Ant Media does not charge extra for the Flutter WebRTC SDK or its other SDKs. If there’s anything else we can do for our developer friends, please let us know.

This document explains how to configure Flutter WebRTC SDK and run the sample applications.

Prerequisites for Flutter WebRTC app development

Software requirements

- Android Studio (for Android)

- Xcode (for iOS)

You can also run the projects from the terminal or command line. For better debugging, however, consider using Android Studio or Xcode. This document uses Android Studio to build and run the applications.

How to set up your first application on Flutter

- Install Flutter: Please follow the installation steps mentioned here to install Flutter.

- Install Android Studio: Please follow the installation steps mentioned here to install Android Studio.

- Add the Dart language plugins and Flutter extensions to Android Studio. Follow the instructions below for your operating system.

- For macOS, use the following instructions:

- Start Android Studio

- Open plugin preferences (Preferences > Plugins as of v3.6.3.0 or later)

- Select the Flutter plugin and click Install

- Click Yes when prompted to install the Dart plugin

- Click Restart when prompted

- For Linux or Windows, use the following instructions:

- Start Android Studio

- Open plugin preferences (File > Settings > Plugins)

- Select Marketplace, select the Flutter plugin, and click Install

- Click Yes when prompted to install the Dart plugin

- Click Restart when prompted

- To verify the Flutter installation, please create a sample app and build it by following the instructions provided here to create a Flutter project.

Download and run the WebRTC sample projects

Download the sample Flutter WebRTC projects

- Clone or download the Flutter SDK code from the Flutter SDK GitHub repository.

Configuration of the sample project

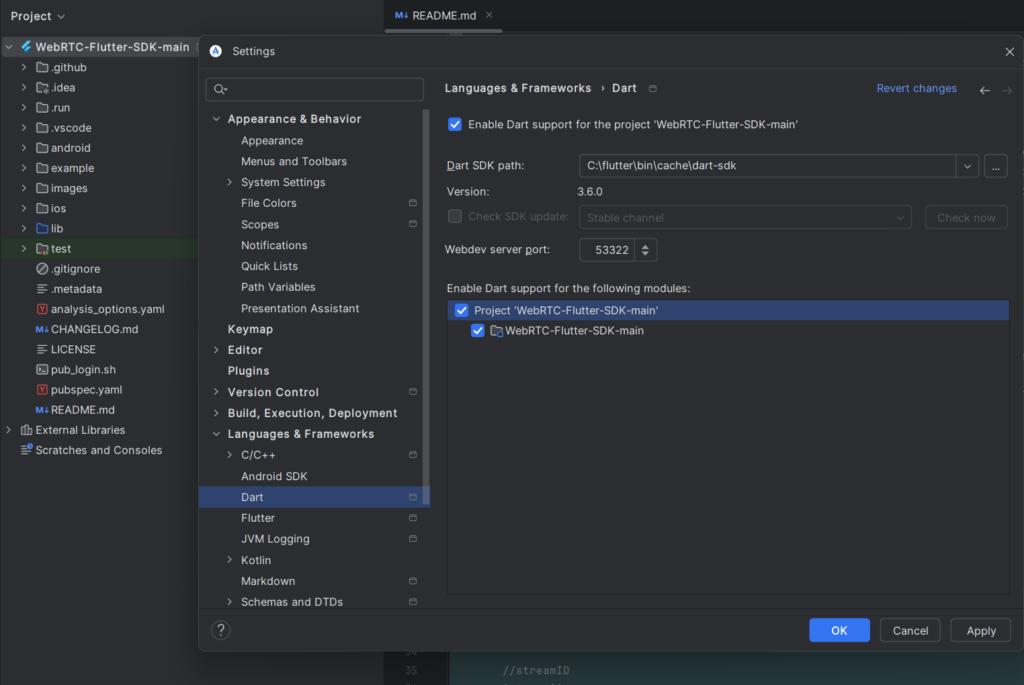

Open the Flutter WebRTC SDK in Android Studio, and make sure you have installed the Flutter and Dart plugins.

Make sure that the paths of Flutter and Dart SDK are correctly configured in Android Studio.

File > Settings > Languages & Frameworks

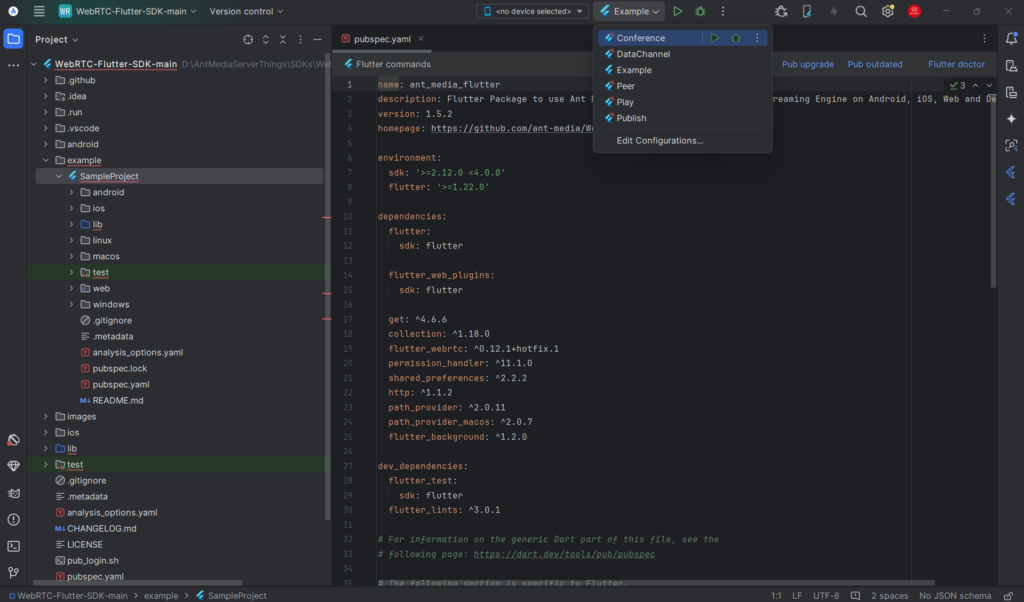

If these plugins have been installed and the locations of Flutter and Dart SDK are configured correctly, then the options to run the samples will appear automatically after source code indexing. Please refer to the screenshot below.

Install dependencies and run sample project

In the project navigator, you will find a folder named examples. In the examples folder, there is a SampleProject that uses the ant_media_flutter dependency and includes all options (Publish, Play, P2P, Conference, and DataChannel) for testing.

In the same examples folder, there are separate projects to test Publish, Play, Peer, Conference, and DataChannel individually.

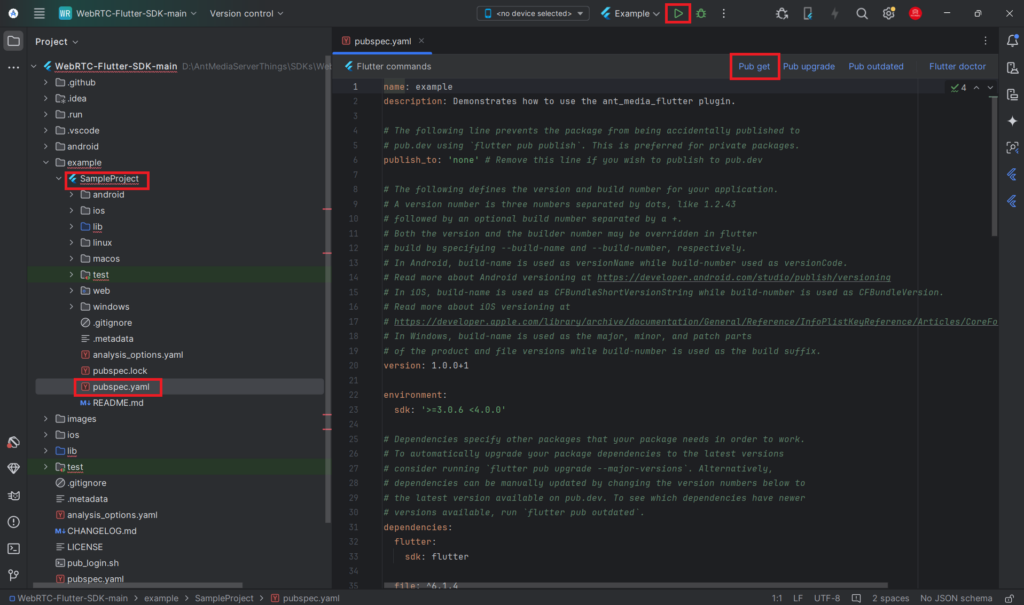

All projects use the Ant Media Flutter dependency, which is declared in the pubspec.yaml file.

Click the Pub get button to install the dependency in the project. The Pub get button appears only when the pubspec.yaml file is open in the editor.

Run the sample Flutter WebRTC apps

To run the sample apps on Android, connect the Android device to your workstation and make sure that developer options and USB debugging are enabled on the device. On Android 4.1 and lower, the Developer options screen is available by default. To get the Developer options screen on Android 4.2 and higher, follow the steps below:

- Open the Settings app

- Select System

- Select About phone

- Scroll to the build number and tap it 7 times

- Return to the previous screen to find Developer options near the bottom

- Scroll down and enable USB debugging

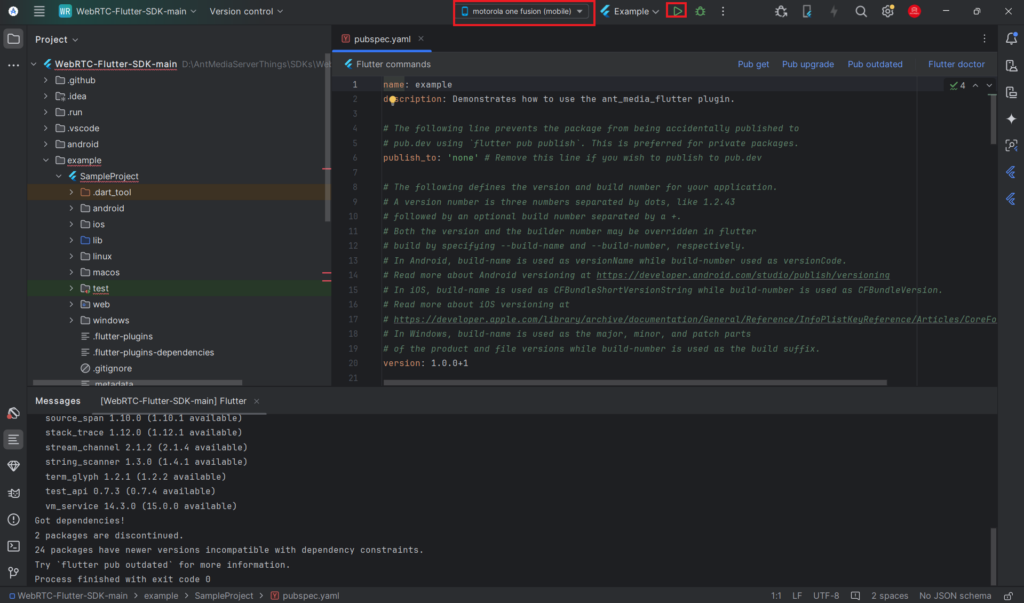

If USB debugging is enabled on your device, then your device name will automatically be available in the list of devices.

Select the device, select the sample project from the target list, and click the Run button. The Gradle task will start; wait until the app has been built. Once the build is complete, a confirmation pop-up for the installation appears on your device.

Follow the instructions below to run the specific sample apps.

Running Publish Sample App

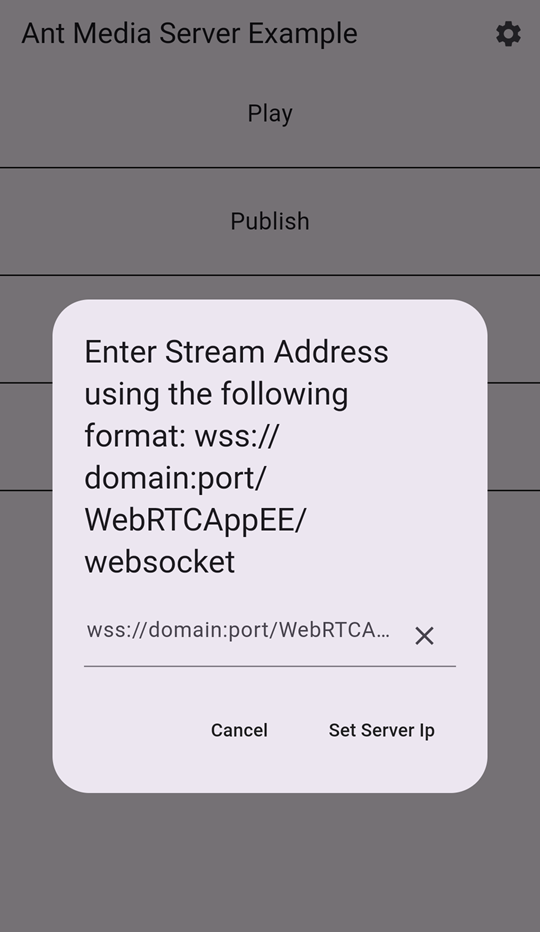

Select the Publish app from the target list and click the Run button. Once the app is running, enter the server IP address by following the steps below.

- Tap on the Settings icon in the top right corner of the application.

- Enter the Server IP as wss://ant_media_server_address:port/WebRTCAppEE/websocket

- Tap the ‘Set Server Ip’ button.
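The Server IP format from the steps above can be assembled from its parts. The following is a minimal sketch in plain Dart that does not use the SDK; `buildServerUrl` is a hypothetical helper, and the host and port in `main` are placeholder values, not real server settings.

```dart
/// Builds a server URL in the format shown above:
/// wss://ant_media_server_address:port/WebRTCAppEE/websocket
/// Hypothetical helper for illustration; it is not part of the SDK.
String buildServerUrl(String host, int port, {String appName = 'WebRTCAppEE'}) {
  return 'wss://$host:$port/$appName/websocket';
}

void main() {
  // 'example.com' and 5443 are placeholders; use your own server's values.
  print(buildServerUrl('example.com', 5443));
}
```

Keeping the application name as a parameter makes it easy to point the same app at a different application on the server, if you use one.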

Publish WebRTC stream.

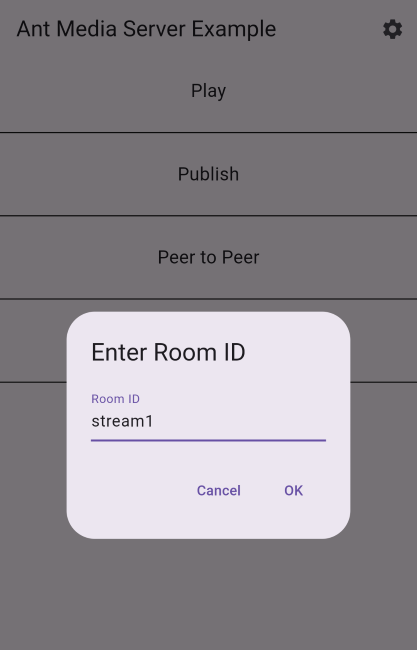

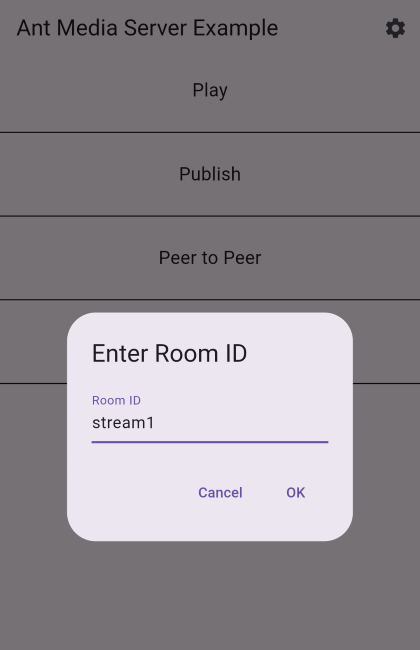

- Select the Publish option from the list & enter the streamId.

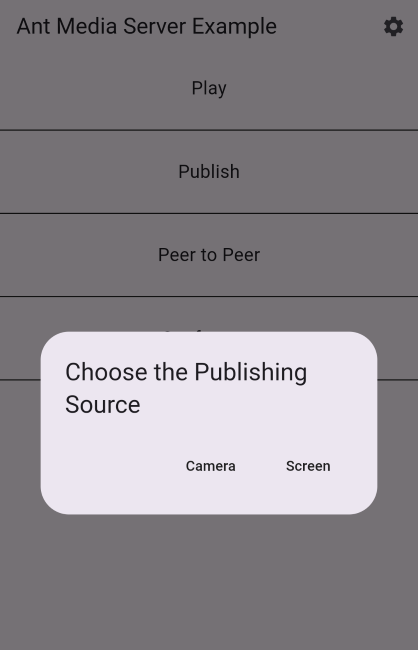

- Choose the publishing source. Please note that for iOS, the app screen recording option records the app’s UI only, while the Android app records the complete device screen.

- WebRTC publishing will start. You can also switch between the front & back cameras.

- To verify whether the stream was published successfully, open the web panel of your Ant Media Server (e.g., http://server_ip:5080) and view the stream there.

- You can also quickly play the stream via an embedded player. For more details, check this document.

Running Play Sample App

- Set the Server Address: Set the Ant Media Server Address as we did for the publish sample.

- Play the WebRTC stream:

- Before playing, make sure that there is a stream that is already publishing to the server with the same streamId that you want to play.

- Select the Play option from the list & enter the streamId.

- The WebRTC stream will start to play.

WebRTC Flutter SDK Samples:

There are other Flutter SDK samples that you can explore & use as a reference. To check more samples, visit the WebRTC Flutter SDK samples.

Using WebRTC Flutter SDK

Before you start using the WebRTC Flutter SDK, we highly recommend using the sample projects to get started with your application. It’s good to know the dependencies and how the SDK works in general.

Install the ant_media_flutter package from pub.dev

YAML

Initialize imports and request permission from Flutter-SDK

Dart

Set stream Id and server URL

The method below is used to publish a stream.

Dart

How to use the SDK.

A common function in ant_media_flutter.dart provides the functionality of the Publish, Play, Peer, Conference, and DataChannel modules. It takes the streamId, server address, roomId, type of calling, and all the callback functions described below as parameters. The method below is used as the common function.

Dart

Here is a short description of each parameter:

1. Ip: The address of the WebRTC server that we want to use in our SDK. It has the same format as the Server IP entered in the sample app: wss://ant_media_server_address:port/WebRTCAppEE/websocket

2. streamId: The ID of the stream that we want to use.

3. roomId: The ID of the room that we want our stream to join. In the case of publishing, the roomId should be passed as a token.

4. type: An AntMediaType enum value; there are 6 cases in this type.

- Undefined: This is a default type.

- Publish: When we want to publish a stream or want to test data channel examples, we have to pass this type as AntMediaType.Publish.

- Play: When we want to play a stream, we have to pass this type as AntMediaType.Play.

- Peer: When we want to start a peer-to-peer connection, we have to pass this type as AntMediaType.Peer.

- Conference: When we want to start a conference, we have to pass this type as AntMediaType.Conference.

5. UserScreen: This value selects the publishing source. When it is true, the screen recording is published; when it is false, the camera recording is published.

6. forDataChannel: This is a bool used specifically to publish the WebRTC stream without any recording option, so that only the data channel functionality is used. If this value is true, the SDK will not publish any type of recording. The initialization of the data channel is not affected by this property: the data channel is initialized in all cases (Publish, Play, Peer, Conference). Only the publishing of recordings is affected by this value.

7. OnStateChange: This is a function that takes one parameter of the HelperState type and returns nothing. The HelperState type has these subtypes:

- CallStateNew: This type of HelperState function is called when the call is started. We can update the UI here when a call has started.

- CallStateBye: This HelperState function is called when the peer connection has been closed, or we can say when the call has been finished.

- ConnectionOpen: This HelperState function is called when the web socket has been connected and opened.

- ConnectionClosed: This HelperState function is called when the web socket has been disconnected and closed.

- ConnectionError: This HelperState function is called when there is an error in making a peer connection.

8. onLocalStream: This function returns nothing and takes a MediaStream type parameter. The parameter is the local stream produced by our device’s camera or screen recording. We can use this stream to see what we are publishing to the server.

9. onAddRemoteStream: This function returns nothing and takes a MediaStream type parameter. The parameter is the remote stream produced by a connected peer’s camera or screen recording. We can use this stream to see what we are getting from the server.

10. onDataChannel: This function returns nothing and takes an RTCDataChannel type parameter, which refers to the data channel that has been initialized.

11. onDataChannelMessage: This function returns nothing and takes three parameters:

- dc: this is the data channel by which the message is sent or received.

- message: this is the RTCDataChannelMessage type, which is the message that we have received or sent.

- isReceived: This is a bool type. If this is true, a message is received. If false, then a message is sent.

12. onUpdateConferencePerson: This function returns nothing and is used for AntMediaType.Conference connections. It is called whenever other streams are added to or removed from the room while the user is in it. It takes a dynamic type parameter, which is an array of the stream IDs that have joined the room.

13. onRemoveRemoteStream: This function returns nothing and is called when a remote stream has been removed. It takes a MediaStream type parameter, which is the stream that has been removed. Use this function to add the code that navigates back when the call has been disconnected.
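Some of the parameter descriptions above can be sketched in plain Dart without the SDK. The following is an illustration only: `PublishSource`, `CallState`, `selectPublishSource`, `statusText`, `RoomChange`, and `diffStreams` are hypothetical stand-ins, not the SDK's own types or functions. It also assumes that forDataChannel takes precedence over UserScreen, which the description of parameter 6 suggests but does not state outright.

```dart
// Hypothetical stand-ins for illustration; these are not the SDK's own types.
enum PublishSource { none, camera, screen }

enum CallState {
  callStateNew,
  callStateBye,
  connectionOpen,
  connectionClosed,
  connectionError,
}

/// Picks what gets published, following the descriptions of parameters 5 and 6.
/// Assumption: forDataChannel takes precedence over userScreen.
PublishSource selectPublishSource({
  required bool userScreen,
  required bool forDataChannel,
}) {
  if (forDataChannel) return PublishSource.none;
  return userScreen ? PublishSource.screen : PublishSource.camera;
}

/// Status text an app might show for each state listed under parameter 7.
const Map<CallState, String> statusText = {
  CallState.callStateNew: 'Call started',
  CallState.callStateBye: 'Call finished',
  CallState.connectionOpen: 'WebSocket connected',
  CallState.connectionClosed: 'WebSocket disconnected',
  CallState.connectionError: 'Connection error',
};

/// Stream IDs that joined or left the room between two updates (parameter 12).
class RoomChange {
  const RoomChange(this.joined, this.left);
  final Set<String> joined;
  final Set<String> left;
}

/// Compares the previous and current stream ID lists of a room.
RoomChange diffStreams(List<dynamic> previous, List<dynamic> current) {
  final before = previous.map<String>((id) => '$id').toSet();
  final after = current.map<String>((id) => '$id').toSet();
  return RoomChange(after.difference(before), before.difference(after));
}

void main() {
  // The stream IDs below are placeholder values.
  print(selectPublishSource(userScreen: true, forDataChannel: false));
  print(statusText[CallState.connectionOpen]);
  final change = diffStreams(['stream1', 'stream2'], ['stream2', 'stream3']);
  print('joined: ${change.joined}, left: ${change.left}');
}
```

In an app, logic like `diffStreams` could run inside the onUpdateConferencePerson callback to decide which remote views to add or remove, while the status text could be shown from the OnStateChange callback.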