In this blog post, I will describe three different video conferencing solutions in Ant Media Server (AMS): Stream-Based SFU, Track-Based SFU, and MCU. They can be confusing, even for me, so I decided to write this post. I will try to keep it as simple as I can. Let’s start with the most mature one.

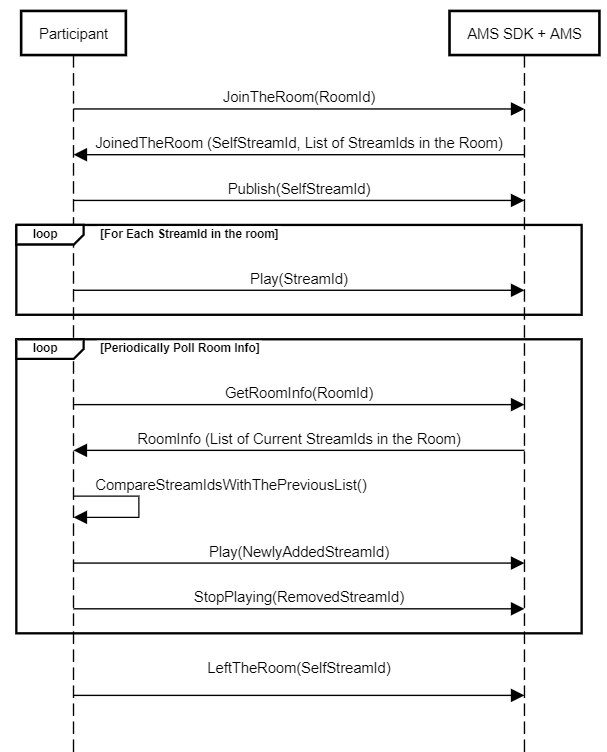

Stream-Based SFU Video Conference

This was the first conference solution implemented in AMS. It works directly with WebRTC streams. Here is how this type of conference works:

- To join a room, a participant sends a join request for that room to AMS.

- AMS responds with a stream id for the participant and the list of stream ids of the other participants in the room.

- The participant starts publishing a WebRTC stream with that stream id.

- The participant sends a separate WebRTC play request for each stream id it received.

- To find out about participants who join or leave later, the participant must periodically request the room info (which contains the stream ids in the room) and compare the returned stream ids with the previous list.

- It then sends a play request for each new stream id and a stop request for each removed one.

- Calling the leave method is enough to leave the room; there is no need to send a stop request for each stream id.
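The polling step is the part of this flow that is easiest to get wrong, so here is a minimal TypeScript sketch of it. It compares the stream ids from the previous room-info poll with the latest ones, plays newcomers, and stops participants who left. The `ConferenceClient` interface and its `play`/`stop` methods are hypothetical stand-ins for whatever your SDK provides; they are not the actual AMS SDK API.

```typescript
// Hypothetical client interface: a stand-in for the SDK, not the real
// Ant Media Server API.
interface ConferenceClient {
  play(streamId: string): void;
  stop(streamId: string): void;
}

// Stream ids to start and stop after one room-info poll.
interface StreamDiff {
  toPlay: string[];
  toStop: string[];
}

// Compare the previous stream id list with the latest room-info result.
// Our own stream id is skipped because we publish it rather than play it.
function diffStreamIds(
  previous: readonly string[],
  current: readonly string[],
  ownStreamId: string,
): StreamDiff {
  const prev = new Set(previous);
  const curr = new Set(current);
  return {
    toPlay: current.filter((id) => id !== ownStreamId && !prev.has(id)),
    toStop: previous.filter((id) => id !== ownStreamId && !curr.has(id)),
  };
}

// Play newcomers, stop leavers, and return the list to compare against
// on the next poll.
function applyRoomInfo(
  client: ConferenceClient,
  previous: readonly string[],
  current: readonly string[],
  ownStreamId: string,
): string[] {
  const { toPlay, toStop } = diffStreamIds(previous, current, ownStreamId);
  toPlay.forEach((id) => client.play(id));
  toStop.forEach((id) => client.stop(id));
  return current.slice();
}

// Usage with a fake client that records the requests it would send.
const calls: string[] = [];
const fakeClient: ConferenceClient = {
  play: (id) => {
    calls.push(`play:${id}`);
  },
  stop: (id) => {
    calls.push(`stop:${id}`);
  },
};
let known = ["alice", "bob"];
known = applyRoomInfo(fakeClient, known, ["bob", "carol", "me"], "me");
// calls is now ["play:carol", "stop:alice"]
```

Keeping the diff as a pure function makes it easy to test without a network; the polling timer itself (for example `setInterval`) only needs to call `applyRoomInfo` with each new room-info response.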

Stream-Based SFU Video Conference Flow

The Stream-Based SFU Video Conference solution works in cluster mode. The publish request and all play requests sent by a participant are forwarded to the same node in the cluster, so there is no need to distinguish between origin and edge nodes in such a cluster.

The Stream-Based SFU Video Conference solution is implemented in the JS, Android, iOS, Flutter, and React Native SDKs, and there are sample projects for all of them. For the JS SDK, see the conference.html sample page.

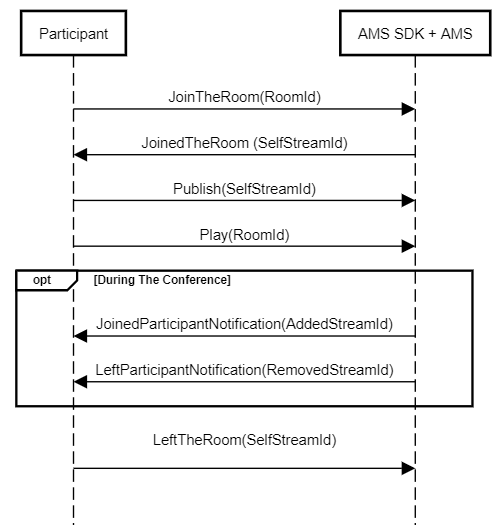

Track-Based SFU Video Conference

It works with WebRTC tracks instead of streams. You can find more about multitrack streaming in this blog post. Here is how this type of conference works:

- To join a room, a participant sends a join request for that room to AMS.

- AMS responds with a stream id for the participant.

- The participant starts publishing a WebRTC stream with that stream id.

- The participant sends only one WebRTC play request, for the room id.

- AMS sends notifications about newly joined and departed participants (used for updating the UI).

- Calling the leave method is enough to leave the room.
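Because the server pushes join/leave notifications in this mode, the client only has to react to events instead of polling. The following TypeScript sketch keeps the set of remote participants for the UI. The `RoomNotification` shape and the `Roster` class are hypothetical, chosen for illustration; they are not the message format AMS actually sends.

```typescript
// Hypothetical notification shape; the real AMS message format may differ.
type RoomNotification =
  | { kind: "joined"; streamId: string }
  | { kind: "left"; streamId: string };

// Tracks the remote participants the UI should render. With a single play
// request for the room, the client never polls; it only reacts to the
// notifications pushed by the server.
class Roster {
  private readonly participants = new Set<string>();

  constructor(private readonly ownStreamId: string) {}

  handle(note: RoomNotification): void {
    if (note.streamId === this.ownStreamId) {
      return; // our own stream is not a remote participant
    }
    if (note.kind === "joined") {
      this.participants.add(note.streamId);
    } else {
      this.participants.delete(note.streamId);
    }
  }

  // Sorted copy of the current participant ids, e.g. for rendering tiles.
  list(): string[] {
    return Array.from(this.participants).sort();
  }
}

const roster = new Roster("me");
roster.handle({ kind: "joined", streamId: "alice" });
roster.handle({ kind: "joined", streamId: "bob" });
roster.handle({ kind: "joined", streamId: "me" });
roster.handle({ kind: "left", streamId: "alice" });
// roster.list() returns ["bob"]
```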

Track-Based SFU Video Conference Flow

The Track-Based SFU Video Conference solution works in cluster mode in the same way as the Stream-Based SFU Video Conference solution. The publish and play requests sent by a participant are forwarded to the same node in the cluster, so there is no need to distinguish between origin and edge nodes in such a cluster.

Advantages of Track-Based SFU Video Conference

The Track-Based SFU Video Conference has the following advantages:

- Easier to implement: there is no need to poll the room info, and the play request is sent only once.

- You can enable or disable the video/audio tracks of each participant stream you play, which saves resources on the client side.

- It reduces resource usage on both the server side and the client side if your implementation is compatible with the optimizations introduced in this blog post.
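To illustrate enabling and disabling tracks, here is a TypeScript sketch that keeps video enabled only for participants whose tiles are currently visible on screen, and only issues a request when a track's state changes. Choosing tracks by on-screen visibility is just an example policy. The `TrackToggle` callback is a hypothetical placeholder for the SDK call that enables or disables a track; its signature is an assumption.

```typescript
// Hypothetical placeholder for the SDK call that enables or disables a
// remote track; the signature is an assumption.
type TrackToggle = (trackId: string, enabled: boolean) => void;

// Keep video enabled only for tracks whose tiles are visible, and call
// setTrackEnabled only when a track's state actually changes.
function syncVideoTracks(
  allTrackIds: readonly string[],
  visibleTrackIds: ReadonlySet<string>,
  enabledNow: Set<string>,
  setTrackEnabled: TrackToggle,
): void {
  for (const id of allTrackIds) {
    const shouldEnable = visibleTrackIds.has(id);
    if (shouldEnable && !enabledNow.has(id)) {
      setTrackEnabled(id, true);
      enabledNow.add(id);
    } else if (!shouldEnable && enabledNow.has(id)) {
      setTrackEnabled(id, false);
      enabledNow.delete(id);
    }
  }
}

// Usage: four remote tracks, only "b" and "d" are on screen.
const toggles: string[] = [];
const enabledTracks = new Set<string>(["a", "b", "c"]);
syncVideoTracks(["a", "b", "c", "d"], new Set(["b", "d"]), enabledTracks, (id, on) => {
  toggles.push(`${id}:${on}`);
});
// toggles is now ["a:false", "c:false", "d:true"]
```

Tracking the enabled set locally avoids sending redundant requests each time the layout is recomputed.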

For now, it is implemented only in the JS SDK. See the multitrack-conference.html sample page to understand how to integrate it with the JS SDK.

The Improved Track-Based SFU Video Conference

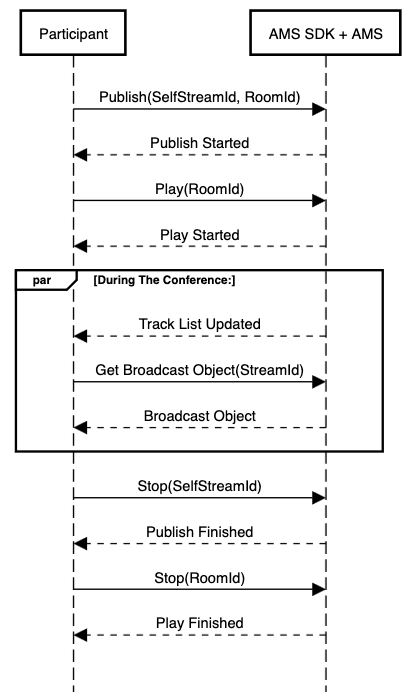

This is a recent conference solution in AMS. It works with WebRTC tracks instead of streams. You can find more about multitrack streaming in this blog post. Here is how this type of conference works:

- To join the room, send a publish request to the server with your stream id and the room name.

- Send a play request for the room id.

- AMS responds with the publish started and play started callbacks.

- AMS sends the track list updated callback to let you know about newly joined and departed participants (used for updating the UI).

- You can call the get broadcast object method to retrieve the Broadcast object as JSON. If you pass the room name, you can check the subtrack list. If you pass a participant’s stream id, you can check the metadata field, which can be used to store that participant’s screen share, microphone, or camera status.

- To leave the conference room, call the stop function separately for your stream id and for the room name.
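The join and leave steps above can be sketched as plain request sequences. In this TypeScript sketch, the `ConferenceRequest` type and the `send` function are hypothetical; they mirror the steps described here rather than the real AMS WebSocket messages or SDK methods.

```typescript
// Hypothetical request shapes mirroring the steps above; they are not the
// real AMS WebSocket messages or SDK method signatures.
type ConferenceRequest =
  | { command: "publish"; streamId: string; roomName: string }
  | { command: "play"; streamId: string }
  | { command: "stop"; streamId: string };

type Send = (req: ConferenceRequest) => void;

// Joining: publish your own stream together with the room name, then play
// the room id. No separate join request is needed.
function joinConference(send: Send, streamId: string, roomName: string): void {
  send({ command: "publish", streamId, roomName });
  send({ command: "play", streamId: roomName });
}

// Leaving: stop your own stream and the room separately.
function leaveConference(send: Send, streamId: string, roomName: string): void {
  send({ command: "stop", streamId });
  send({ command: "stop", streamId: roomName });
}

// Usage with a send function that just records the requests.
const sent: ConferenceRequest[] = [];
const record: Send = (req) => {
  sent.push(req);
};
joinConference(record, "me", "room1");
leaveConference(record, "me", "room1");
// sent.map((r) => r.command) is ["publish", "play", "stop", "stop"]
```

Leaving sends two stop requests, one for your own stream id and one for the room, matching the last step of the flow.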

The Improved Track-Based SFU Video Conference solution works in cluster mode in the same way as the Stream-Based SFU Video Conference solution. The publish and play requests sent by a participant are forwarded to the same node in the cluster, so there is no need to distinguish between origin and edge nodes in such a cluster.

Advantages of The Improved Track-Based SFU Video Conference

The Improved Track-Based SFU Video Conference has the following advantages:

- Easier to implement: there is no need to poll the room info, and the play request is sent only once.

- You can enable or disable the video/audio tracks of each participant stream you play, which saves resources on the client side.

- It reduces resource usage on both the server side and the client side if your implementation is compatible with the optimizations introduced in this blog post.

- There is no need to call join/leave room functions, which makes the flow much simpler.

- Retrieving the broadcast object opens up new opportunities, such as using the metadata field to sync additional information per participant.
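As an example of syncing per-participant information through the metadata field, the following TypeScript sketch serializes microphone, camera, and screen share status to JSON and parses it back, falling back to defaults for missing or malformed metadata. It assumes the metadata is stored as a JSON string; the `ParticipantState` field names are hypothetical, not a schema defined by AMS.

```typescript
// Hypothetical per-participant state; the field names are illustrative,
// not a schema defined by Ant Media Server.
interface ParticipantState {
  isMicMuted: boolean;
  isCameraOff: boolean;
  isScreenShared: boolean;
}

const DEFAULT_STATE: ParticipantState = {
  isMicMuted: false,
  isCameraOff: false,
  isScreenShared: false,
};

// Serialize the state into a string to store in the metadata field
// (assumed here to hold JSON).
function encodeState(state: ParticipantState): string {
  return JSON.stringify(state);
}

// Parse the metadata read from a participant's broadcast object. Missing,
// malformed, or partial metadata falls back to the defaults (all false).
function decodeState(metaData: string | undefined): ParticipantState {
  if (!metaData) {
    return { ...DEFAULT_STATE };
  }
  try {
    const parsed: unknown = JSON.parse(metaData);
    if (typeof parsed !== "object" || parsed === null) {
      return { ...DEFAULT_STATE };
    }
    const p = parsed as Partial<Record<keyof ParticipantState, unknown>>;
    return {
      isMicMuted: p.isMicMuted === true,
      isCameraOff: p.isCameraOff === true,
      isScreenShared: p.isScreenShared === true,
    };
  } catch {
    return { ...DEFAULT_STATE };
  }
}

const meta = encodeState({ isMicMuted: true, isCameraOff: false, isScreenShared: true });
const decoded = decodeState(meta); // isMicMuted and isScreenShared are true
const fallback = decodeState("not json"); // every field is false
```

Validating each field on read keeps one participant's bad metadata from breaking the UI for everyone else.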

For now, it is implemented in the JS, Flutter, iOS, and Android SDKs. If you have Ant Media Server Enterprise Edition 2.8.0 or later, see the conference.html sample page to understand how to integrate it with the JS SDK. For a full video conference UI project that is compatible with the optimizations, check this project.

MCU Video Conference

In an MCU-type video conference, you get only one output stream for a room. This stream contains the merged video and mixed audio. The drawback of this type of conference is that each participant hears their own audio in the mixed audio. So MCU is generally used in addition to other video conference solutions, to get a single stream for recording the room or for sharing it with players (viewers who are not participants).

You can enable MCU alongside the Stream-Based Video Conference by sending a join request with the mode="mcu" parameter. See the conference.html sample page for the JS SDK, and check this blog post for details.

You can also check the MCU video conference sample page, mcu.html, if you prefer. To avoid hearing your own audio, you can customize this page to play the merged video but not the mixed audio. Instead of the mixed audio, play each other participant’s audio stream individually.
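The customization described above boils down to a small playback decision: use the merged stream for video only and play every other participant’s audio separately. This TypeScript sketch computes that plan. `PlaybackPlan` and `planMcuPlayback` are hypothetical names, the merged stream id is whatever id your setup uses for the room’s MCU output, and actually playing the streams is left to your SDK.

```typescript
// Hypothetical playback plan for a customized MCU page.
interface PlaybackPlan {
  mergedStreamId: string; // the room's merged (MCU) output stream
  playMergedAudio: boolean; // false: the mixed audio contains our own voice
  audioStreamIds: string[]; // other participants' audio, played one by one
}

function planMcuPlayback(
  mergedStreamId: string,
  participantStreamIds: readonly string[],
  ownStreamId: string,
): PlaybackPlan {
  return {
    mergedStreamId,
    playMergedAudio: false,
    audioStreamIds: participantStreamIds.filter((id) => id !== ownStreamId),
  };
}

const plan = planMcuPlayback("room1", ["alice", "me", "bob"], "me");
// plan.audioStreamIds is ["alice", "bob"]; plan.playMergedAudio is false
```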

MCU Conference

After v2.5.1, MCU also works in cluster mode, but it is a bit tricky. MCU requires all streams to be on the same node, which goes against the cluster mode logic. So to use MCU in cluster mode, you should keep a separate node that collects all streams from the other nodes and runs the MCU process. This server should have high resource (CPU, memory) specs. In cluster mode, instead of enabling MCU with a join request (as described above), you should enable MCU by sending this REST method to the node kept for MCU processing.

Conclusion

You have different alternatives for building a video conference solution in AMS. I have tried to explain how they work without diving into code details, but I have also provided links to relevant references for developers.

Beyond the solutions explained above, there are other video conference topics, such as REST methods for room management, conference security, viewer-only mode, recording the room, and adding an RTMP stream to a room. We will discuss those in another blog post.