With the release of version 2.0, Ant Media Server Enterprise Edition supports the WebRTC Data Channel standard. We previously published three blog posts on the setup, usage and implementation of Data Channels with Ant Media Server EE:

- How to Exchange Data Easily Using WebRTC Data Channels with Ant Media Server

- WebRTC Chat and File Transfer Done Easily with Ant Media Server – Part 1

- WebRTC Chat and File Transfer Done Easily with Ant Media Server – Part 2

This last blog post on the topic covers sending control messages such as actions and events through WebRTC Data Channels, along with best practices for building more interactive and complex applications with them. We will examine two use case scenarios with example usages from our JavaScript and Android SDKs and try to make them more understandable for our customers.

Sending events or actions through data channels is a convenient and secure way to implement interactive applications with WebRTC. Low-latency, secure communication through Data Channels is especially useful in interactive applications such as conferences, e-learning, or games where each participant has a certain role, is allowed to perform certain types of actions and can trigger certain events.

For example, in an e-learning application, students can raise their hand to ask a question and the teacher can decide to accept or reject the request. If the request is accepted, the teacher and the other students start playing the video stream of that student.

A similar scenario can be realized for company video conferences, where the manager can allow employees to speak on request during a presentation. Similarly, voting on a subject during conferences or e-learning, communicating events such as muted microphones, alerting or poking other users, or sending game commands and events during WebRTC games can all be implemented through Data Channels.

Message Format and Protocol

As mentioned in our blog post on the chat application, we recommend sending events or user actions as structured data in JSON or XML. For most applications, parsing them with common JSON or XML parsers on the receiver side is enough. For complex applications, a validation schema such as XML Schema or JSON Schema makes validating, parsing and mapping these events less error-prone.

An example event in JSON format, sent through a data channel to all conference participants on our conference.html page when someone mutes their microphone, looks like this:

    {
      "streamId": "stream1",
      "eventType": "MIC_MUTED"
    }
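
A minimal sketch of how such an event could be built and sent from the web side. The helper names `buildEvent` and `sendEvent` are hypothetical, and `sendFn` is an assumption: it stands in for whatever function actually writes a string to the data channel in your SDK version, and is passed in so the sketch does not depend on a specific API.

```javascript
// Hypothetical helper: builds the event object in the format shown above.
function buildEvent(streamId, eventType) {
  return { streamId: streamId, eventType: eventType };
}

// Hypothetical helper: serializes the event and hands it to sendFn.
// sendFn(streamId, text) is an assumption standing in for the SDK call
// that writes a string to the data channel of the given stream.
function sendEvent(sendFn, streamId, eventType) {
  var text = JSON.stringify(buildEvent(streamId, eventType));
  sendFn(streamId, text);
  return text;
}

// Usage with a stand-in sender that only records what would be sent.
var sent = [];
sendEvent(function (id, text) { sent.push(text); }, "stream1", "MIC_MUTED");
console.log(sent[0]);
```

Passing the sender in as a parameter also makes the message-building logic easy to test without a live connection.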

For more complex applications, some kind of communication protocol is needed to manage the possible interactions between different users or the system. When designing such protocols for interactive and reactive applications, it is very important to clearly specify which actions and events a user with a certain role can generate and which states these events can lead to. UML Sequence diagrams can help to document and design the communication scenarios, and UML State diagrams can help to cover the use cases and edge cases that depend on different states and roles. Next, we will look at a simple use case related to conferences.
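
Before moving on, one lightweight way to write down which role may generate which event is a lookup table. The sketch below is only an illustration: the role names and the table itself are assumptions modeled on the e-learning example, and `RaiseHandReject` is a hypothetical event name.

```javascript
// Illustrative role-to-event table. Role names and the RaiseHandReject
// event name are assumptions, not part of any SDK.
var ALLOWED_EVENTS = {
  teacher: ["RaiseHandAccept", "RaiseHandReject", "MIC_MUTED", "MIC_UNMUTED"],
  student: ["RaiseHandRequest", "MIC_MUTED", "MIC_UNMUTED"]
};

// Returns true only if the role is known and may generate the event.
function isAllowed(role, eventType) {
  var allowed = ALLOWED_EVENTS[role];
  return Array.isArray(allowed) && allowed.indexOf(eventType) !== -1;
}

console.log(isAllowed("student", "RaiseHandRequest")); // true
console.log(isAllowed("student", "RaiseHandAccept"));  // false
```

A receiver can run such a check on every incoming message and ignore events that the sender's role is not supposed to produce.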

Use Case: WebRTC Video Conference Events

In the latest version of our conference.html and of ConferenceActivity in our WebRTC Android SDK, when a conference attendee mutes or unmutes their microphone, or enables or disables their video stream for publishing, a special event message like the one in the section above is sent to all the other conference participants through the WebRTC Data Channel. The other participants then parse the received event, and based on the event type the developer can customize conference.html to notify the users or show an icon on the corresponding streams.

On the web side, when data is received, the callback function is called with the “data_received” info:

... else if(info == "data_received") { handleNotificationEvent(obj); }

which then handles different notification events based on the event type:

    function handleNotificationEvent(obj) {
        console.log("Received data : ", obj.event.data);
        var notificationEvent = JSON.parse(obj.event.data);
        if(notificationEvent != null && typeof(notificationEvent) == "object") {
            var eventStreamId = notificationEvent.streamId;
            var eventTyp = notificationEvent.eventType;
            if(eventTyp == "CAM_TURNED_OFF") {
                console.log("Camera turned off for : ", eventStreamId);
            } else if (eventTyp == "CAM_TURNED_ON") {
                console.log("Camera turned on for : ", eventStreamId);
            } else if (eventTyp == "MIC_MUTED") {
                console.log("Microphone muted for : ", eventStreamId);
            } else if (eventTyp == "MIC_UNMUTED") {
                console.log("Microphone unmuted for : ", eventStreamId);
            }
        }
    }

For simplicity, this example only logs the events to the console. On Android, the onMessage method of ConferenceActivity handles the notification events analogously with Toast messages:

    public void onMessage(DataChannel.Buffer buffer, String dataChannelLabel) {
        ByteBuffer data = buffer.data;
        String strDataJson = new String(data.array(), StandardCharsets.UTF_8);
        try {
            JSONObject json = new JSONObject(strDataJson);
            String eventType = json.getString("eventType");
            String streamId = json.getString("streamId");
            Toast.makeText(this, eventType + " : " + streamId, Toast.LENGTH_LONG).show();
        } catch (Exception e) {
            Log.e(getClass().getSimpleName(), e.getMessage());
        }
    }

Please check our conference.html page on the web side and ConferenceActivity in our Android WebRTC SDK to better understand how these messages are produced and handled.
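
If the same data channel also carries plain text (for example chat messages), the receiver may want to guard the parsing step. The following is a minimal sketch of that idea, not the code shipped in conference.html: the `handlers` table and `dispatchNotification` are hypothetical names, and returning a string instead of logging is a choice made only to keep the example easy to check.

```javascript
// Hypothetical handler table keyed by the event types used above.
var handlers = {
  CAM_TURNED_OFF: function (id) { return "Camera turned off for : " + id; },
  CAM_TURNED_ON: function (id) { return "Camera turned on for : " + id; },
  MIC_MUTED: function (id) { return "Microphone muted for : " + id; },
  MIC_UNMUTED: function (id) { return "Microphone unmuted for : " + id; }
};

// Parses raw data channel text and dispatches it. Returns null when the
// text is not valid JSON or does not look like a known notification event.
function dispatchNotification(rawText) {
  var evt;
  try {
    evt = JSON.parse(rawText);
  } catch (e) {
    return null; // not JSON, e.g. a plain chat message
  }
  if (evt === null || typeof evt !== "object") return null;
  if (typeof evt.streamId !== "string" || typeof evt.eventType !== "string") return null;
  if (!Object.prototype.hasOwnProperty.call(handlers, evt.eventType)) return null;
  return handlers[evt.eventType](evt.streamId);
}

console.log(dispatchNotification('{"streamId":"stream1","eventType":"MIC_MUTED"}'));
console.log(dispatchNotification("hello"));
```

A lookup table like this keeps each new event type to a single added entry instead of another `else if` branch.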

Use Case: Raising Hand in E-Learning

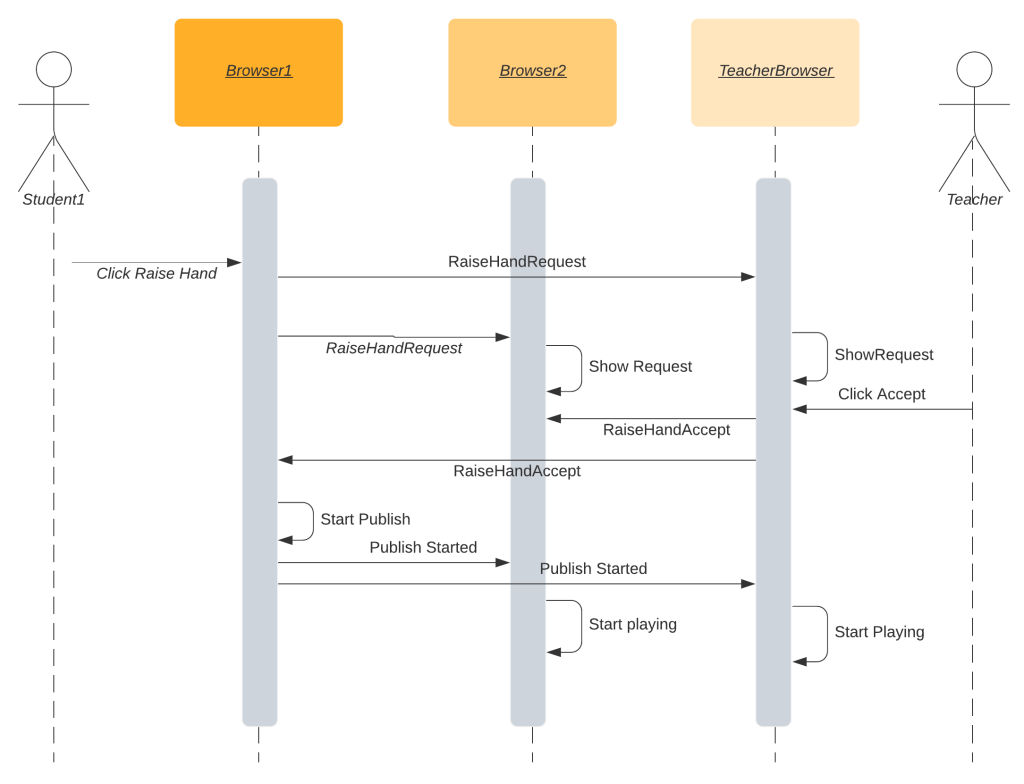

A more complex case is a student raising a hand to ask a question in an e-learning application.

In our hypothetical scenario, multiple students can send raise hand messages at approximately the same time through the WebRTC Data Channel, after which the teacher decides to accept one of the requests or reject them. The teacher's answer should therefore reference the unique stream id of the sender of the RaiseHandRequest being accepted or rejected. If the teacher accepts a request, an accept message is sent to everybody, the student whose request was accepted automatically starts publishing a video stream, and the others play it.

UML Sequence Diagram of Raise Hand *

We do not show here how to implement different roles for the students and the teacher, but one way to achieve this is to have different customized versions of conference.html and ConferenceActivity for the teacher and the students. We can also add the following constraint to our scenario: while a student is asking a question, no other student can request to ask a question. To implement this constraint, state information can be stored in each participant with the following possible states:

- Teaching

- Question_Session_Empty

- Question_Session_Full

Students can therefore only ask questions when the state is Question_Session_Empty; when the state is Question_Session_Full, nobody can raise a hand to ask a question. In our scenario the teacher is the coordinator of the state, and in the edge case where a student is temporarily disconnected and reconnects, the teacher should send the current state to that student. (Another option is for the teacher to send the state regularly as a kind of keep-alive message.)

In this scenario an example RaiseHandRequest message in JSON format would look like this:
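
The three states and the constraint can be sketched as a small state machine. Only the state names and `RaiseHandAccept` come from the scenario; the other event names (`QuestionSessionOpened`, `QuestionFinished`, `QuestionSessionClosed`) and the transitions between them are assumptions made for illustration.

```javascript
var STATES = {
  TEACHING: "Teaching",
  EMPTY: "Question_Session_Empty",
  FULL: "Question_Session_Full"
};

// Students may raise their hand only while the question session is empty.
function canRaiseHand(state) {
  return state === STATES.EMPTY;
}

// Returns the next state for an event, or the same state if the event
// does not apply. Only RaiseHandAccept comes from the scenario above;
// the other three event names are hypothetical.
function nextState(state, eventType) {
  if (state === STATES.TEACHING && eventType === "QuestionSessionOpened") return STATES.EMPTY;
  if (state === STATES.EMPTY && eventType === "RaiseHandAccept") return STATES.FULL;
  if (state === STATES.FULL && eventType === "QuestionFinished") return STATES.EMPTY;
  if (state !== STATES.TEACHING && eventType === "QuestionSessionClosed") return STATES.TEACHING;
  return state;
}

var state = STATES.TEACHING;
state = nextState(state, "QuestionSessionOpened");
console.log(canRaiseHand(state)); // true
state = nextState(state, "RaiseHandAccept");
console.log(canRaiseHand(state)); // false
```

Because `nextState` is a pure function, the teacher can send the resulting state to a reconnecting student, who simply overwrites the local copy.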

{ "senderId":"student1", "eventType":"RaiseHandRequest", "eventTime": 1592602900, "description":"Question Related to Lesson 1" }

A positive answer to this request from the teacher would look like this:

{ "senderId":"teacher1", "eventType":"RaiseHandAccept", "eventTime": 1592602986, "answerToRequest": "student1", "description":"You have only 1 minute to ask" }

After receiving this, student1 would start publishing a video stream and the others would start playing it.
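
A minimal sketch of both sides of this exchange, assuming `eventTime` is a Unix timestamp in seconds as the example values suggest. The function names and the `"publish"`, `"play"` and `"ignore"` return labels are hypothetical; in a real application they would map to the corresponding SDK calls.

```javascript
// Builds a RaiseHandRequest in the format shown above.
// nowMs is the current time in milliseconds (e.g. Date.now()).
function buildRaiseHandRequest(senderId, description, nowMs) {
  return {
    senderId: senderId,
    eventType: "RaiseHandRequest",
    eventTime: Math.floor(nowMs / 1000), // assumed to be epoch seconds
    description: description
  };
}

// Decides what a participant should do when a message arrives.
// myId is the participant's own id; the return labels are hypothetical.
function onRaiseHandAccept(message, myId) {
  if (message.eventType !== "RaiseHandAccept") return "ignore";
  return message.answerToRequest === myId ? "publish" : "play";
}

var accept = {
  senderId: "teacher1",
  eventType: "RaiseHandAccept",
  eventTime: 1592602986,
  answerToRequest: "student1",
  description: "You have only 1 minute to ask"
};

console.log(onRaiseHandAccept(accept, "student1")); // publish
console.log(onRaiseHandAccept(accept, "student2")); // play
```

Matching on `answerToRequest` is what lets every participant react correctly to the same broadcast message.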

In this blog post, we described some example use cases and best practices for implementing interactive applications with WebRTC Data Channels. The use cases covered here are relatively simple, but a wide variety of interactive and complex projects can be implemented using WebRTC Data Channels and the Ant Media Server SDKs. We want to guide developers as they implement feature-rich projects using our SDKs.

Please note that the methods and design decisions described here are not complete and are not the only way to implement this kind of solution. The design best suited to your needs may differ based on your requirements.

conference.html and ConferenceActivity are included in our sample projects, and WebRTC Data Channels are available to our customers with our Web, Android and iOS SDKs in version 2.0.

If you have any questions, please drop a line to contact@antmedia.io.

Notes:

- In the UML Sequence Diagram of Raise Hand, the visualized communication is simplified for clarity. Normally every message exchanged between the class participants goes through Ant Media Server EE, because the underlying streams are in publish and play mode.

References:

- First image taken from: https://www.chronicle.com/interactives/20190719_inclusive_teaching

- https://healthitsecurity.com/features/how-healthcare-secure-texting-messaging-impact-the-industry