As communication technology develops, live streaming is becoming more and more common on the web. Live streaming has come a long way, from minutes of latency to ultra-low latency, thanks to WebRTC.

Anyone who is interested in live streaming, especially real-time streaming, has almost certainly heard of WebRTC. WebRTC servers are just as important as WebRTC itself.

In this blog post, you will get detailed information about both. Let’s start diving into the world of real-time communication by answering the question: what is WebRTC?

What is WebRTC?

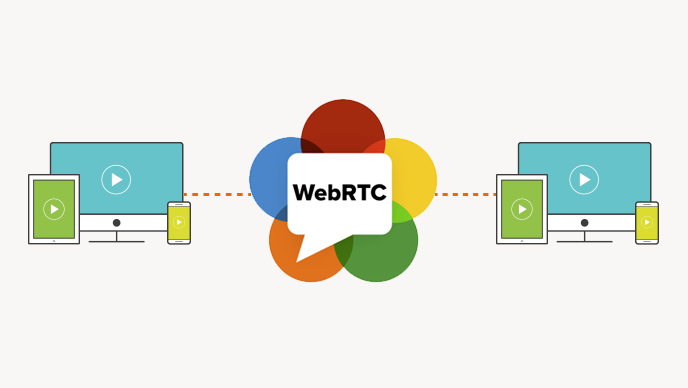

WebRTC stands for Web Real-Time Communication. It is an exciting, powerful, and highly disruptive technology and streaming protocol.

WebRTC is HTML5 compatible, and you can use it to add real-time media communication directly between browsers and devices. One of its main advantages is that it does not require any browser plugins.

WebRTC is supported by all major modern browsers, including Safari, Google Chrome, Firefox, Opera, and others.

Thanks to WebRTC video streaming technology, you can embed the real-time video directly into your browser-based solution to create an engaging and interactive streaming experience for your audience without worrying about the delay.

Now video, and especially live streaming, are indispensable parts of our rapidly changing communication needs. WebRTC helps us meet these communication needs and increases our interaction with real-time communication.

This is particularly relevant as people are increasingly living farther apart from each other and need effective means of communication.

We present a series of free educational videos for you in collaboration with Tsahi Levent-Levi of BlogGeek.me.

This is the first part of the video series and provides information about WebRTC. If you are someone who likes to watch rather than read, start watching the video right away. 🙂

History of WebRTC

One of the most important challenges for the web is to enable human communication through voice and video: real-time communication or RTC for short.

Especially since COVID-19, we all need to communicate with each other regardless of distance and location. Hence, RTC is our new way to communicate.

Once upon a time, RTC was complex, requiring expensive audio and video technologies to be licensed or developed in-house. Integrating RTC technology with existing content, data, and services has been difficult and time-consuming, particularly on the web.

Google joined the video chat market with Gmail video chat in 2008. In 2011, Google introduced Hangouts, originally as a feature of Google+; Hangouts became a stand-alone product in 2013.

Google was keen on RTC technologies. It acquired GIPS, a company that developed many of the components required for RTC, such as codecs and echo cancellation techniques.

Google open-sourced the technologies developed by GIPS and engaged with relevant standards bodies at the Internet Engineering Task Force (IETF) and World Wide Web Consortium (W3C) to ensure industry consensus.

May 2011 marked a historic moment when Ericsson built the first implementation of WebRTC, laying the foundations for the future of the communication industry.

The purpose of WebRTC was clear: to build a method for real-time, plugin-free video, audio, and data communication.

Many web services, such as Skype, Facebook, and Hangouts, used RTC, but using them required downloads, native apps, or plugins. Downloading, installing, and updating plugins can be complex.

Errors are common, and plugins do not always work properly. Using plugins may require expensive licenses or technologies. It is also really hard to persuade people to download a plugin.

WebRTC emerged from the principle that its APIs should be open source, free, standardized, and built into web browsers.

WebRTC Snapshot

- Audio Codecs: Opus, iSAC, iLBC

- Video Codecs: H.264, H.265, VP8, VP9

- Playback Compatibility: All browsers

- Benefits: Real-time communication and browser-based

- Drawbacks: Scalability problem without a media server like Ant Media Server

- Latency: Sub-500-millisecond media delivery

WebRTC Components

We will use Client-A and Client-B as examples to explain WebRTC’s components below.

SDP (Session Description Protocol)

SDP is a simple string-based protocol that is used to share supported codecs between browsers.

In our example,

- Client-A creates its SDP (called the offer), saves it as its local SDP, and then shares it with Client-B.

- Client-B receives the SDP of Client-A and saves it as a remote SDP.

- Client-B creates its SDP (called the answer), saves it as its local SDP, and then shares it with Client-A.

- Client-A receives the SDP of Client-B and saves it as a remote SDP.

The signaling server is responsible for these SDP transfers between peers.
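The four steps above can be sketched as a small state exchange. This is only an illustrative model, not the browser API: the `Peer` class, its method names, and the placeholder SDP strings are all assumptions made for this example, and the direct function calls stand in for the signaling server.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Peer:
    """Toy stand-in for a WebRTC peer; a real SDP is a multi-line text blob."""
    name: str
    local_sdp: Optional[str] = None
    remote_sdp: Optional[str] = None

    def create_offer(self) -> str:
        # The caller creates an offer and keeps it as its local SDP.
        self.local_sdp = f"offer-from-{self.name}"
        return self.local_sdp

    def create_answer(self) -> str:
        # An answer only makes sense once the remote offer has been stored.
        if self.remote_sdp is None:
            raise RuntimeError("no remote offer to answer")
        self.local_sdp = f"answer-from-{self.name}"
        return self.local_sdp

    def set_remote(self, sdp: str) -> None:
        self.remote_sdp = sdp


def negotiate(caller: Peer, callee: Peer) -> None:
    """Run the offer/answer steps in order."""
    offer = caller.create_offer()    # Client-A creates and stores its offer
    callee.set_remote(offer)         # Client-B stores it as the remote SDP
    answer = callee.create_answer()  # Client-B creates and stores its answer
    caller.set_remote(answer)        # Client-A stores it as the remote SDP


client_a, client_b = Peer("Client-A"), Peer("Client-B")
negotiate(client_a, client_b)
print(client_a)
print(client_b)
```

After `negotiate` returns, each peer holds its own description as the local SDP and the other side's description as the remote SDP.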

Let’s assume Client-A supports the H264, VP8, and VP9 codecs for video and the Opus and PCM codecs for audio. Client-B may support a different set that still includes H264 and Opus.

In this case, Client-A and Client-B will use H264 and Opus as their codecs. If there are no common codecs between the peers, peer-to-peer communication cannot be established.
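As a rough sketch of the idea, codec selection can be thought of as finding the first codec in one peer's preference list that the other peer also supports. The `pick_codec` helper is hypothetical, and Client-B's codec lists below are an assumption chosen so that the result matches the outcome described above; real browsers negotiate through the SDP offer and answer rather than with a function like this.

```python
from typing import Dict, List, Optional


def pick_codec(offered: List[str], supported: List[str]) -> Optional[str]:
    """Return the first offered codec the other side also supports, else None."""
    for codec in offered:
        if codec in supported:
            return codec
    return None


client_a: Dict[str, List[str]] = {
    "video": ["H264", "VP8", "VP9"],
    "audio": ["Opus", "PCM"],
}
# Assumed capabilities for Client-B (not stated in the text).
client_b: Dict[str, List[str]] = {
    "video": ["H264"],
    "audio": ["Opus"],
}

selected = {kind: pick_codec(client_a[kind], client_b[kind]) for kind in client_a}
print(selected)  # {'video': 'H264', 'audio': 'Opus'}

# No overlap means no connection can be negotiated for that media type.
print(pick_codec(["VP8"], ["H264"]))  # None
```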

ICE (Interactive Connectivity Establishment)

Interactive Connectivity Establishment (ICE) is used when two nodes across the Internet must communicate as directly as possible, but NATs and firewalls make it difficult for the nodes to reach each other.

It is a networking technique that makes use of STUN (Session Traversal Utilities for NAT) and TURN (Traversal Using Relays Around NAT) to establish a connection between two nodes that is as direct as possible.

WebRTC STUN Server (Session Traversal Utilities for NAT)

A STUN server helps a peer discover the addresses at which it can be reached. For example, our computers generally have a local address in the 192.168.0.0 network, and there is a second address that we see when we visit www.whatismyip.com. This second address is actually the public IP address of our Internet gateway (modem, router, etc.). So let’s define a STUN server: STUN servers let peers learn their public IP address in addition to their local one.

By the way, Google also provides a free STUN server (stun.l.google.com:19302).
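To make this more concrete, here is a minimal sketch of the STUN message format as defined in RFC 5389: a 20-byte binding request, and a parser for the XOR-MAPPED-ADDRESS attribute that carries the public address back to the client. It assumes IPv4 only and does no retransmission or error handling. The `fake_response` helper and the address `203.0.113.7` are made up so that the example runs offline; in practice the request would be sent over UDP to a STUN server.

```python
import os
import socket
import struct
from typing import Tuple

MAGIC_COOKIE = 0x2112A442    # fixed value defined by RFC 5389
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request(transaction_id: bytes) -> bytes:
    """20-byte header: type, attribute length (0), magic cookie, transaction ID."""
    if len(transaction_id) != 12:
        raise ValueError("transaction ID must be 12 bytes")
    return struct.pack("!HHI", BINDING_REQUEST, 0, MAGIC_COOKIE) + transaction_id


def parse_xor_mapped_address(message: bytes) -> Tuple[str, int]:
    """Return the (IPv4 address, port) the server saw the request coming from."""
    msg_type, length, cookie = struct.unpack("!HHI", message[:8])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN binding success response")
    offset, end = 20, 20 + length
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", message[offset:offset + 4])
        value = message[offset + 4:offset + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS and value[1] == 0x01:  # 0x01 = IPv4
            x_port, x_addr = struct.unpack("!HI", value[2:8])
            port = x_port ^ (MAGIC_COOKIE >> 16)
            addr = socket.inet_ntoa(struct.pack("!I", x_addr ^ MAGIC_COOKIE))
            return addr, port
        offset += 4 + attr_len + (-attr_len % 4)  # values are padded to 4 bytes
    raise ValueError("no IPv4 XOR-MAPPED-ADDRESS attribute found")


def fake_response(transaction_id: bytes, ip: str, port: int) -> bytes:
    """Hand-built success response (hypothetical helper for offline testing)."""
    x_port = port ^ (MAGIC_COOKIE >> 16)
    x_addr = struct.unpack("!I", socket.inet_aton(ip))[0] ^ MAGIC_COOKIE
    attr = struct.pack("!HHBBHI", XOR_MAPPED_ADDRESS, 8, 0, 0x01, x_port, x_addr)
    header = struct.pack("!HHI", BINDING_SUCCESS, len(attr), MAGIC_COOKIE)
    return header + transaction_id + attr


tid = os.urandom(12)
request = build_binding_request(tid)
print(len(request))  # 20
print(parse_xor_mapped_address(fake_response(tid, "203.0.113.7", 54321)))
```

The address and port are XOR-ed with the magic cookie on the wire, which is why the parser reverses that step before returning them.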

WebRTC TURN Server (Traversal Using Relays around NAT)

TURN (Traversal Using Relays around NAT) is a protocol that assists in the traversal of network address translators (NAT) or firewalls for WebRTC applications. TURN Server allows clients to send and receive data through an intermediary server.

The TURN protocol is an extension of STUN. Sometimes the addresses obtained from the STUN server cannot be used to establish a peer-to-peer connection because of a NAT or firewall. In this case, the data is relayed over the TURN server.

In our example,

- Client-A finds out its local address and public Internet address by using the STUN server and sends these addresses to Client-B through the signaling server. Each address received from the STUN server is an ICE candidate.

- Client-B does the same: it gets its local and public IP addresses from the STUN server and sends them to Client-A through the signaling server.

- Client-A receives Client-B’s addresses and tries each one by sending special pings in order to create the connection with Client-B. If Client-A receives a response from an address, it puts that address in a list along with its response time and other performance metrics. Finally, Client-A chooses the best address according to its performance.

- Client-B does the same in order to connect to Client-A.
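The selection step above can be sketched as follows. This is a simplification that ranks candidates by response time only; real ICE implementations also weigh candidate types and priorities. The `Candidate` class, the `choose_candidate` function, and all addresses and timings are made up for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Candidate:
    address: str  # "ip:port"
    kind: str     # "local", "public" (learned via STUN) or "relay" (TURN)


def choose_candidate(candidates: List[Candidate],
                     response_ms: Dict[str, Optional[float]]) -> Optional[Candidate]:
    """Return the candidate that answered the ping fastest, or None if none did."""
    reachable = [c for c in candidates if response_ms.get(c.address) is not None]
    if not reachable:
        return None
    return min(reachable, key=lambda c: response_ms[c.address])


# Candidates that Client-B sent through the signaling server (made-up values).
client_b_candidates = [
    Candidate("192.168.0.12:50000", "local"),
    Candidate("203.0.113.7:50000", "public"),
    Candidate("198.51.100.20:3478", "relay"),
]
# Measured response times in milliseconds; None means the ping got no reply.
pings: Dict[str, Optional[float]] = {
    "192.168.0.12:50000": None,
    "203.0.113.7:50000": 38.0,
    "198.51.100.20:3478": 95.0,
}

best = choose_candidate(client_b_candidates, pings)
print(best)
```

Here the local address is unreachable from outside Client-B's network, so the public address wins over the slower relay.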

RTP (Real-time Transport Protocol)

RTP is a mature protocol for transmitting real-time data on top of UDP. Audio and video are transmitted with RTP in WebRTC. RTP has a sister protocol, RTCP (RTP Control Protocol), which carries QoS feedback for the RTP communication. RTSP (Real-Time Streaming Protocol) also uses RTP for data communication.
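For readers who want to see what an RTP packet looks like, the sketch below packs and parses the 12-byte fixed RTP header (version, padding, extension, CSRC count, marker, payload type, sequence number, timestamp, SSRC). It ignores CSRC lists and header extensions, and the payload type `111` and the other field values are arbitrary example values, not something mandated by WebRTC.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence_number: int
    timestamp: int
    ssrc: int


def pack_rtp_header(payload_type: int, sequence_number: int, timestamp: int,
                    ssrc: int, marker: bool = False) -> bytes:
    """Build a fixed header with version 2, no padding, no extension, no CSRCs."""
    first = 2 << 6
    second = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack("!BBHII", first, second, sequence_number & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Decode the first 12 bytes of an RTP packet."""
    if len(packet) < 12:
        raise ValueError("RTP packet is shorter than the fixed header")
    first, second, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=first >> 6,
        padding=bool(first & 0x20),
        extension=bool(first & 0x10),
        csrc_count=first & 0x0F,
        marker=bool(second & 0x80),
        payload_type=second & 0x7F,
        sequence_number=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


packet = pack_rtp_header(111, 1000, 48000, 0x1234ABCD, marker=True) + b"media"
header = parse_rtp_header(packet)
print(header)
```

The sequence number lets the receiver detect loss and reordering, while the timestamp drives playback timing.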

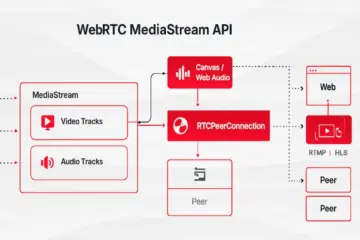

WebRTC Signaling Server

The last part is the signaling server, which is not defined by WebRTC. As mentioned above, the signaling server is used to send SDP strings and ICE candidates between Client-A and Client-B. The signaling server also decides which peers get connected to each other. WebSocket is the preferred transport for communicating with signaling servers.
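Because WebRTC leaves signaling up to the application, its shape is a design choice. The sketch below is one possible in-memory model, assuming a hypothetical `SignalingServer` class whose `relay` method forwards opaque messages between registered peers. A real deployment would typically do the same thing over WebSocket connections; the message fields here are invented for the example.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class SignalingServer:
    """Relays opaque messages between registered peers; it never touches media."""

    def __init__(self) -> None:
        self._peers: Dict[str, Callable[[Message], None]] = {}

    def join(self, peer_id: str, on_message: Callable[[Message], None]) -> None:
        # Register the callback used to deliver messages to this peer.
        self._peers[peer_id] = on_message

    def relay(self, sender: str, recipient: str, kind: str, payload: str) -> None:
        if recipient not in self._peers:
            raise KeyError(f"unknown peer: {recipient}")
        self._peers[recipient]({"from": sender, "type": kind, "payload": payload})


server = SignalingServer()
inbox_a: List[Message] = []
inbox_b: List[Message] = []
server.join("Client-A", inbox_a.append)
server.join("Client-B", inbox_b.append)

server.relay("Client-A", "Client-B", "offer", "<SDP of Client-A>")
server.relay("Client-B", "Client-A", "answer", "<SDP of Client-B>")
server.relay("Client-A", "Client-B", "candidate", "203.0.113.7:50000")

print([m["type"] for m in inbox_b])  # ['offer', 'candidate']
print([m["type"] for m in inbox_a])  # ['answer']
```

Note that the server only forwards the payloads; it does not need to understand SDP or ICE to do its job.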

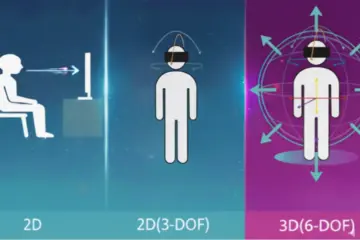

WebRTC Video Streaming

Video streaming enables data from a video file to be continuously delivered via a network or the internet to a viewer’s device.

If a video file is downloaded, a copy of the entire file is saved onto a device’s hard drive, and the video cannot play until the entire file finishes downloading. If it’s streamed instead, the browser plays the video without actually copying and saving it. The video loads a little bit at a time instead of the entire file loading at once, and the information that the browser loads is not saved locally.

However, latency occurs during the live stream of the video. Streaming latency is basically the delay between the camera capturing an event and the event being displayed on viewers’ devices. For some usage scenarios, this latency should be kept to a minimum. Here’s where WebRTC technology saves our lives. 🙂

7 Advantages of WebRTC Video Streaming

- Ultra-Low Latency Video Streaming – Latency is 0.5 seconds

- Platform and device independence

- Advanced voice and video quality

- Secure voice and video

- Easy to scale

- Adaptive to network conditions

- WebRTC Data Channels

How Does WebRTC Work?

- WebRTC sends data directly between browsers; this is called peer-to-peer (P2P)

- It can send audio, video, or data in real-time

- It needs to use NAT traversal mechanisms for browsers to reach each other

- When a direct connection is not possible, P2P traffic has to go through a relay server (TURN)

- With WebRTC you need to think about signaling and media. They are different from each other.

- One of the main features of the technology is that it allows peer-to-peer (browser-to-browser) communication with little intervention from a server, which is usually used only for signaling.

- It’s possible to hold video calls with multiple participants using peer-to-peer communication, but every client then has to send its stream to every other participant. A media server helps reduce the number of streams a client needs to send, usually to one, and can even reduce the number of streams a client needs to receive.

- Servers you’ll need in a WebRTC product:

  - Signaling server

  - STUN/TURN servers

  - Media servers (depending on your use case)
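The effect of a media server on the number of streams can be illustrated with a little arithmetic. The sketch below compares three setups for a call with a given number of participants: pure peer-to-peer ("mesh"), a server that forwards each stream, and a server that mixes everything into one composite stream. The function and the topology names are assumptions made for this example.

```python
from typing import Tuple


def streams_per_client(participants: int, topology: str) -> Tuple[int, int]:
    """Return (streams sent, streams received) by each client in a call."""
    if participants < 2:
        raise ValueError("a call needs at least two participants")
    others = participants - 1
    if topology == "mesh":        # pure peer-to-peer, no media server
        return others, others
    if topology == "forwarding":  # media server forwards every stream
        return 1, others
    if topology == "mixing":      # media server mixes into one composite stream
        return 1, 1
    raise ValueError(f"unknown topology: {topology}")


for topology in ("mesh", "forwarding", "mixing"):
    print(topology, streams_per_client(5, topology))
# mesh (4, 4)
# forwarding (1, 4)
# mixing (1, 1)
```

In a five-person call, each client in a mesh uploads four copies of its stream, while with a media server it uploads only one.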

Reasons to Choose WebRTC

- WebRTC is open source

  - It is completely free for commercial or private use. Even that alone is enough. 🙂 Since it is constantly developing, it will meet your needs for many years.

- It is widely supported

  - Since it is supported by almost all browsers, WebRTC is now used in every usage scenario you can imagine.

- WebRTC is also available for mobile applications

  - It is currently used in many mobile applications. SDKs are available for both mobile and embedded environments, so there is no limitation!

- Don’t think it is only for voice or video chat

  - You can use WebRTC not only for peer-to-peer communication but also to create a group calling service, build a video conferencing solution, or add recording to it.

Is WebRTC Secure?

There are several ways a real-time communication application or plug-in can compromise security. For example:

- Unencrypted media or data can be intercepted while being transmitted between browsers or between a browser and a server.

- Video or audio may be recorded and distributed without the user’s knowledge.

- Malware or viruses can be installed alongside a seemingly harmless plug-in or application.

How does WebRTC solve these security issues?

- WebRTC uses secure protocols, such as DTLS and SRTP.

- Encryption is mandatory for all WebRTC components, including signaling mechanisms.

- WebRTC is not a plugin. Its components run in the browser sandbox and not in a separate process. Components do not require separate installation and are updated whenever the browser is updated.

- Camera and microphone access must be granted explicitly and, when the camera or microphone is running, this is clearly shown by the user interface.

WebRTC for iOS

Ant Media’s WebRTC iOS SDK lets you build your own iOS application that can publish and play WebRTC broadcasts with just a few lines of code. The SDK documentation covers the following topics:

- Run the Sample WebRTC iOS app

  - Publish Stream from your iPhone

  - Play Stream on your iPhone

  - P2P Communication with your iPhone

- Develop a WebRTC iOS app

  - How to Publish

  - How to Play

  - How to use DataChannel

WebRTC for Android

You can use WebRTC on the Android platform with the help of Ant Media Server’s native WebRTC Android SDK. The features of the Android SDK are demonstrated by a sample Android project that comes bundled with the SDK.

WebRTC Market Forecast (2020-2027)

According to research, the global market was valued at $3.2 billion in 2020 and is projected to reach $40.6 billion by 2027, growing at a CAGR of 43.4% from 2020 to 2027. The global demand for WebRTC is expected to rise, largely due to a rise in its application in end-use sectors such as telecom, IT, e-commerce, and others.

What is a WebRTC Server?

A “WebRTC server” is a server, running in the cloud or self-hosted, that provides the functionality required to connect WebRTC sessions properly so that your WebRTC projects work.


Types of WebRTC Servers

There are 4 types of WebRTC Servers:

- WebRTC application servers

- WebRTC signaling servers

- NAT traversal servers for WebRTC

- WebRTC media servers

WebRTC Application Servers

WebRTC application servers are basically application and website hosting servers. Yes, that’s all.

WebRTC Signaling Servers

A WebRTC signaling server is a server that manages the connections between devices. It is not concerned with the media traffic itself; its focus is on signaling. This includes enabling one user to find another in the network, negotiating the connection itself, resetting the connection if needed, and closing it down.

NAT Traversal Servers For WebRTC

Network address translation traversal is a computer networking technique of establishing and maintaining Internet protocol connections across gateways that implement network address translation (NAT).

NAT traversal techniques are required for many network applications, such as peer-to-peer file sharing and Voice over IP.

WebRTC Media Servers

A WebRTC media server is a type of “multimedia middleware” (located in the middle of the communicating peers) through which media traffic passes on its way from source to destination. Media servers can offer different capabilities, including group communications (distributing a media stream created by one peer among several receivers, i.e. acting as a Multipoint Control Unit, or MCU), mixing (converting several incoming streams into a single composite stream), transcoding (adapting codecs and formats between incompatible clients), recording (permanent storage of media exchanged between peers), etc.

Many popular WebRTC services are hosted today on AWS, Google Cloud, Microsoft Azure, and DigitalOcean servers. You can embed your WebRTC media in any WordPress, PHP, or other website.

WebRTC Samples

With Ant Media’s live demo, you can immediately test a WebRTC live stream and experience real-time latency. Experience it now!

WebRTC in Ant Media Server

Ant Media provides a ready-to-use, highly scalable real-time video streaming solution for live video streaming needs.

Based on customer requirements and preferences, it enables a live video streaming solution to be deployed easily and quickly on-premises or on public cloud networks such as Alibaba Cloud, AWS, DigitalOcean and Azure.

Ant Media Server supports most of the common media streaming protocols like RTMP, HLS, and of course WebRTC. Actually, Ant Media Server is one of the best WebRTC servers on the planet.

Ant Media Server provides all of the features listed above and can deliver WebRTC publishing latency as low as ~0.2 seconds. If you would like to experience real-time video streaming, try Ant Media Server for free now.

In this blog post, we looked for an answer to the question of what is WebRTC and discussed it in detail. We hope we were able to help you with this blog post. If you have any questions please feel free to contact us via contact@antmedia.io.