Who wouldn’t want to stream high-quality media more cheaply and simply, with low latency? CMAF makes this widely shared dream possible. It is the result of a concerted effort by tech giants to increase efficiency and reduce latency.

The need for low-latency solutions grew when Adobe abandoned support for Flash Player, which hastened the decline of RTMP. Now, CMAF is one of the best streaming formats, providing low latency for industry-wide needs. In this article, you will find detailed information about CMAF, which has become increasingly popular in the streaming world.

What Is CMAF (Common Media Application Format)?

CMAF stands for Common Media Application Format. It is an extensible format for encoding and packaging segmented media objects for delivery to, and decoding on, end-user devices in adaptive media streaming. In short, CMAF is a format designed to simplify the delivery of HTTP-based streaming media. It is an emerging standard that helps reduce cost, complexity, and latency, bringing streaming latency down to around 3-5 seconds. CMAF simplifies the delivery of media to playback devices by working with both the HLS and DASH protocols to package data in a uniform transport container.

It is worth repeating: CMAF itself is not a protocol. It is a format, a set of containers and standards for single-approach video streaming, that works with protocols such as HLS and MPEG-DASH.
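To make the format-versus-protocol distinction more concrete, here is a minimal Python sketch of an HLS media playlist that points at CMAF-style fragmented MP4 files. The file names (`init.mp4`, `seg-1.m4s`), the 6-second segment duration, and the helper name `hls_media_playlist` are assumptions for illustration only, not part of any particular server's output.

```python
import math


def hls_media_playlist(init_uri: str, segment_uris: list[str], segment_s: float) -> str:
    """Build a simple VOD HLS media playlist that references fMP4 segments.

    All URIs are hypothetical; the playlist only points at media files,
    it does not contain any media itself.
    """
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:7",
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_s)}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
        # EXT-X-MAP names the initialization section shared by every fMP4 segment.
        f'#EXT-X-MAP:URI="{init_uri}"',
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{segment_s:.3f},")
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


playlist = hls_media_playlist("init.mp4", ["seg-1.m4s", "seg-2.m4s"], 6.0)
print(playlist)
```

The playlist is just a lightweight text file. A DASH manifest could, in principle, reference the same `init.mp4` and `.m4s` files, which is the point of sharing one container format across protocols.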

The Emergence of CMAF

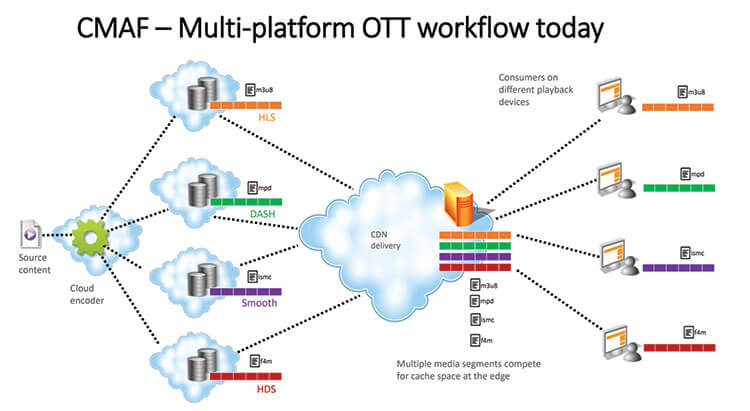

As you know, Adobe stopped supporting Flash Player at the end of 2020. As RTMP declined, other HTTP-based (Hypertext Transfer Protocol) technologies for adaptive bitrate streaming emerged. However, different streaming standards require different file containers. For example, MPEG-DASH uses .mp4 containers, while HLS streams have traditionally been delivered in .ts format.

Therefore, every broadcaster who wants to reach a wider audience must encode and store the same video file twice, because packaging for each container creates a completely different set of files.

These two versions of the same video stream must be created either in advance or on the fly. Both approaches incur additional storage and processing costs.

Apple and Microsoft proposed that the Moving Picture Experts Group (MPEG) create a new uniform standard called Common Media Application Format (CMAF) to reduce complexity when transmitting video online.

Timeline:

- February 2016: Apple and Microsoft proposed CMAF to the Moving Picture Experts Group (MPEG).

- June 2016: Apple announced support for the fMP4 format in HLS.

- July 2017: Specifications finalized.

- January 2018: CMAF standard officially published.

Why Do You Need CMAF?

Live video streaming involves many technical processes, and a variety of codecs, media formats, protocols, and more add to the complexity. Above all, encoding files in different media formats makes this already difficult process slower and more expensive.

As mentioned above, to reach a wider audience, broadcasters need to create multiple copies of each stream in different file containers.

As a result, these duplicate files roughly double the cost of packaging and storage, and they compete with each other for space on CDN servers, which reduces delivery efficiency.

Let’s look at what Akamai said about this:

“These same files, although representing the same content, cost twice as much to package, twice as much to store on origin, and compete with each other on Akamai edge caches for space, thereby reducing the efficiency with which they can be delivered.”

This is where CMAF comes into play. As a standard streaming format across all platforms, it enables single-approach encoding, packaging, and storage.

In short, with CMAF streaming you have one set of audio/video files in fragmented MP4 format with very lightweight manifest files for all four adaptive bitrate (ABR) formats. Hypothetically, this cuts encoding and storage costs by 75% and makes your caching much more efficient.

CMAF replaces these four sets of files with a single set of audio/video MP4 files and four adaptive bitrate manifests. Source: Streaming Media
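The 75% figure follows from simple arithmetic if you assume that each of the four ABR formats previously needed its own, equally sized copy of the media. Here is a rough Python sketch under that assumption; the function name is hypothetical, and manifest files are ignored because they are very small compared with the media.

```python
def storage_savings(copies_before: int, copies_after: int = 1) -> float:
    """Fraction of media storage saved when several equal-sized copies collapse into fewer."""
    if copies_after <= 0 or copies_before < copies_after:
        raise ValueError("expected copies_before >= copies_after >= 1")
    return 1 - copies_after / copies_before


# Four format-specific copies replaced by a single CMAF set.
print(f"{storage_savings(4):.0%}")  # 75%
# Two copies (for example .ts for HLS and .mp4 for DASH) replaced by one set.
print(f"{storage_savings(2):.0%}")  # 50%
```

Real savings depend on how many formats a workflow actually served before, so treat the percentage as an upper-bound illustration rather than a guarantee.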

How Does CMAF Work?

Before CMAF, Apple’s HLS protocol used the MPEG transport stream container format, or .ts (MPEG-TS). Other HTTP-based protocols such as DASH used the fragmented MP4 format, or .mp4 (fMP4). Microsoft and Apple agreed to reach a wider audience through the HLS and DASH protocols by using a standardized transport container in the form of fragmented MP4. The purpose is to distribute content using MP4 only.

To lower latency, CMAF relies on two techniques: chunked encoding and chunked transfer encoding. Chunked encoding breaks the video into smaller chunks of a set duration, which can be published as soon as they are encoded, and chunked transfer encoding delivers them over HTTP without waiting for the full segment. That way, near-real-time delivery (3-5 seconds) can take place while later chunks are still being processed.
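The effect of chunking on delay can be sketched with a small Python example. It only models when encoded data becomes publishable, not real encoding or network transfer, and the 6-second segment and 500 ms chunk durations are assumed values chosen for illustration.

```python
def chunk_publish_times(segment_ms: int, chunk_ms: int) -> list[int]:
    """Return the times (ms after the segment starts encoding) at which each chunk is ready.

    Durations are integers in milliseconds to avoid floating-point drift.
    A final, shorter chunk is emitted if the segment is not an exact multiple.
    """
    if segment_ms <= 0 or chunk_ms <= 0:
        raise ValueError("durations must be positive")
    times = list(range(chunk_ms, segment_ms, chunk_ms))
    times.append(segment_ms)  # the last chunk completes when the segment does
    return times


# Without chunking, nothing can be published until the whole segment is encoded.
whole_segment = chunk_publish_times(6000, 6000)
# With 500 ms chunks, the first data is publishable after 500 ms.
chunked = chunk_publish_times(6000, 500)

print(whole_segment[0])  # 6000
print(chunked[0])        # 500
print(len(chunked))      # 12
```

In this simplified model the player can start receiving data 5.5 seconds earlier per segment. Actual end-to-end latency also depends on encoder, CDN, and player buffering behavior.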

Advantages of CMAF Streaming

CMAF streaming is one of the easiest ways to reduce both the latency and the complexity of streaming. CMAF streaming helps with:

- Cutting costs

- Minimizing workflow complexity

- Reducing latency

CMAF and Other Streaming Protocols

Now let’s compare different live streaming protocols with CMAF.

CMAF vs RTMP

RTMP stands for Real-Time Messaging Protocol. It is a TCP-based (Transmission Control Protocol) technology developed by Macromedia for streaming audio, video, and data over the Internet between a Flash player and a server. Macromedia was purchased by its rival Adobe Inc. on December 3, 2005. RTMP was once the most popular live streaming protocol.

RTMP Streaming Protocol Technical Specifications

- Audio Codecs: AAC, AAC-LC, HE-AAC+ v1 & v2, MP3, Speex

- Video Codecs: H.264, VP8, VP6, Sorenson Spark®, Screen Video v1 & v2

- Playback Compatibility: Not widely supported anymore

  - Limited to Flash Player, Adobe AIR, RTMP-compatible players

  - No longer accepted by iOS, Android, most browsers, and most embeddable players

- Benefits: Low latency and minimal buffering

- Drawbacks: Not optimized for quality of experience or scalability

- Latency: 5 seconds

- Variant Formats: RTMPT (tunneled through HTTP), RTMPE (encrypted), RTMPTE (tunneled and encrypted), RTMPS (encrypted over SSL), RTMFP (layered over UDP instead of TCP)

CMAF vs HLS

HLS stands for HTTP Live Streaming. HLS is an adaptive HTTP-based protocol used for transporting video and audio data from media servers to the end user’s device.

HLS was created by Apple in 2009. Apple announced HLS at about the same time as the iPhone 3GS. Earlier iPhone generations had live streaming playback problems, and Apple wanted to fix them with HLS.

The biggest advantage of HLS is its broad support. HLS is currently the most widely used streaming protocol. However, it comes with a latency of 5-20 seconds.

HLS’s adaptive-bitrate capabilities ensure that broadcasters deliver the optimal user experience and minimize buffering events by adapting the video quality to the viewer’s device and connection.

It may make more sense to use HLS in streams where video quality is important but latency is not that important.

Features of HLS video streaming protocol

- Closed captions

- Fast forward and rewind

- Alternate audio and video

- Fallback alternatives

- Timed metadata

- Ad insertion

- Content protection

HLS Technical Specifications

- Audio Codecs: AAC-LC, HE-AAC+ v1 & v2, xHE-AAC, Apple Lossless, FLAC

- Video Codecs: H.265, H.264

- Playback Compatibility: HLS was created for iOS devices, but it is now supported by Google Chrome; Android, Linux, Microsoft, and macOS devices; several set-top boxes, smart TVs, and other players. It is now a universal protocol.

- Benefits: Supports adaptive bitrate, reliable, and widely supported.

- Drawbacks: Video quality and viewer experience are prioritized over latency.

- Latency: Standard HLS delivers 5-20 seconds of latency, but the Low-Latency HLS extension has now been incorporated as a feature set of HLS, promising to deliver sub-2-second latency.

CMAF vs WebRTC

In other words, low latency vs. ultra-low latency. Ant Media Server currently supports both LL (CMAF) and ULL (WebRTC). Here is some basic information about these technologies. CMAF provides low latency (3-5 seconds) in live streaming, while WebRTC provides ultra-low latency (0.5 seconds). So which one is right for your low-latency streaming project, CMAF streaming or WebRTC streaming? Let’s learn more about WebRTC.

WebRTC stands for Web Real-Time Communication. WebRTC is a very exciting, powerful, and highly disruptive cutting-edge technology and streaming protocol.

WebRTC is HTML5 compatible, and you can use it to add real-time media communications directly between browsers and devices, without requiring any plugins to be installed in the browser. WebRTC is increasingly supported by all major modern browser vendors, including Safari, Google Chrome, Firefox, Opera, and others.

Thanks to WebRTC video streaming technology, you can embed real-time video directly into your browser-based solution to create an engaging and interactive streaming experience for your audience without worrying about delay. WebRTC video streaming is changing the way audiences engage in the new normal.

WebRTC Features

- Ultra-Low Latency Video Streaming – Latency is 0.5 seconds

- Platform and device independence

- Advanced voice and video quality

- Secure voice and video

- Easy to scale

- Adaptive to network conditions

- WebRTC Data Channels

Which Streaming Protocol To Use?

Every technology has advantages and disadvantages, and you can pick the right one according to your use case. We have covered CMAF in detail above.

CMAF is a good choice if there is no interactivity between broadcasters and viewers. It is easier to scale CMAF with CDNs, and it is not much affected by momentary network fluctuations because its latency is about 3-5 seconds.

HLS, on the other hand, is a good choice if you care about stream quality more than latency.

WebRTC is a good choice if there is interactivity between broadcasters and viewers. To scale, you need to manage edge WebRTC servers, and it is affected by momentary network fluctuations (jitter, congestion) because its latency is only about 0.5 seconds.
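The guidance above can be condensed into a small decision helper. This is only a sketch: the function name is hypothetical, and the thresholds are assumptions that conservatively use the upper end of the latency ranges quoted in this article (about 5 seconds for CMAF and about 20 seconds for HLS).

```python
def suggest_protocol(interactive: bool, latency_budget_s: float) -> str:
    """Suggest a delivery technology from interactivity and an acceptable latency budget."""
    if latency_budget_s <= 0:
        raise ValueError("latency budget must be positive")
    # Interactive use cases, or budgets CMAF may not meet, point to WebRTC (~0.5 s).
    if interactive or latency_budget_s < 5:
        return "WebRTC"
    # Budgets that standard HLS (5-20 s) may exceed point to CMAF (3-5 s).
    if latency_budget_s < 20:
        return "CMAF"
    # Latency is not a concern, so prioritize quality and reach.
    return "HLS"


print(suggest_protocol(interactive=True, latency_budget_s=30))   # WebRTC
print(suggest_protocol(interactive=False, latency_budget_s=8))   # CMAF
print(suggest_protocol(interactive=False, latency_budget_s=30))  # HLS
```

A real decision would also weigh scaling costs, CDN availability, and device support, which this helper deliberately leaves out.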

CMAF in a Nutshell

CMAF will streamline server efficiency to serve most endpoints. It is an emerging standard that helps reduce cost, complexity, and latency, delivering around 3-5 seconds of latency in streaming.

3 advantages of CMAF streaming:

- Cutting costs

- Minimizing workflow complexity

- Reducing latency

This is where Ant Media comes in. Ant Media Server supports video distribution in various formats, including CMAF, WebRTC, HLS, RTMP (ingest), SRT, and RTSP. You can scale your business with Ant Media Server.

Try Ant Media Server for Free

Explore Ant Media Server now to provide viewers with a unique experience.

Try Ant Media Server for free with its full features including Flutter and other WebRTC SDKs.

We wanted to give you a detailed article, and we hope you found it useful. We are always here for any questions you may have. You can reach us at contact@antmedia.io.

Useful Links

WebRTC SDKs’ guides for Android, iOS, React Native, and Flutter.

You may also want to check out Future of Ultra-Low Latency Streaming Market, Adventure Continues: CMAF is available in Ant Media Server v2.2, and An Overview of WebRTC Statistics.